Check out what's new:

Future of edge and on-device AI in gaming

We look at the advancements in machine learning techniques and consumer devices that are driving the future of on-device AI and edge AI.

In our first post in this two part series, we explored the expected benefits of edge AI for gaming and looked at the current state of on-device AI. In this post, we’ll look at the advancements in machine learning (ML) techniques and consumer devices that are driving the future of on-device AI.

We’ll also touch on what the future of edge and on-device AI is expected to look like in gaming.

ML advancements that will make on-device AI possible

More advanced ML architectures, techniques, and learning methods are greatly improving model efficiency and quality at lower scales making it possible to create LLMs small enough to add advanced AI processing capabilities to consumer devices. While on-device models are still limited in what kinds of tasks these compressed models are able to perform at sufficient quality, future advancements and hardware improvements are expected to approximate the quality of current LLMs on device sometime in the coming years.

Model compression doesn’t just reduce the size and memory requirements for neural networks while maintaining their accuracy, it also helps improve the efficiency of models while reducing costs. Here are some of the ML advancements that are making smaller models possible.

New AI scaling hypotheses

For a long-time, bigger was thought to be better in large language model (LLM) research circles. Known as the AI Scaling Hypothesis, this theory challenged the previous belief among machine learning experts that algorithmic innovations were the core driver of AI breakthroughs and suggested that improvements might instead be generated by training models with increasingly larger computational resources and datasets.

That led to a race among tech companies to create increasingly massive models – and indeed did generate many groundbreaking improvements in AI capabilities and sophistication. The scaling rules based on this hypothesis that were used by OpenAI to train their models have been credited with improving the ability for GPT-3 and GPT-4 to learn implicitly and for reducing hallucinations. However, this drive for scale has resulted in massive models with higher latency, greater computational intensity, and higher costs – all factors that discourage the widespread adoption of generative AI in real-time applications like gaming and made local deployment of these models impossible.

But it turns out that there’s more than one way to achieve greater model sophistication. As early as 2016, SqueezeNet was able to achieve better accuracy than AlexNet with 50x fewer parameters and a model size of under 0.5 MB with different training techniques. In 2022, DeepMind’s Chinchilla project found that smaller models trained on more data were found to be better or comparable to larger models trained on less data when both models had the same amount of compute to leverage. Google’s LLaMA team took this even further in a paper published in 2023 that demonstrated that smaller models trained on even more data than Chinchilla’s initial optimal predictions performed even better.

Distillation

Knowledge distillation is a transfer learning method that can be deployed to make models smaller by removing parts of the model and teaching the smaller ‘student’ model to reproduce the outputs of a bigger ‘teacher’ model. Distillation can also be done using an unconnected larger model as a ‘trainer’ for the smaller model.

This strategy was validated in 2019 with DistilBert retaining 97% of BERT’s language understanding capabilities with a model that was 40% of it’s size and which ran 60% faster. More recent work by Google Research on distillation has shown that it’s possible to improve accuracy with up to 2000x smaller models and only 0.1% more of the training dataset. They were also able to outperform baseline fine-tuned models using fractions of their datasets. For example, they were able to create a 770 million parameter model which outperformed their 700x larger 540 billion parameter PaLM model on the ANLI benchmark using only 80% of the fine-tuning dataset.

Quantization

Quantization is a model compression technique with the goal of reducing the precision of weights in the neural network using fewer bits than their original precision. This typically involves reducing a model from 32 bits down to 2 bits per weight. This technique greatly reduces storage requirements, inference time, and computational needs. While quantization can result in some precision loss, overall model quantization techniques are able to significantly compress the model without significant model degradation.

Quantization can be enhanced via Quantization Aware Training (QAT) where the training method mimics inference-time quantization by using quantized values during the forward training pass. This approach can lead to greater accuracy preservation as models learn to adapt to precision loss.

Pruning

Pruning is a model compression technique that involves removing parts of a model – including entire layers or attention heads – from a neural network to improve the model’s efficiency and reduce it’s size by reducing its complexity.

Pruning can be done in a number of ways with common techniques involving removing the smallest weights or connections in the model or the weights that have the least impact on model output. When pruning, the larger base model is trained then connections or weights are identified for pruning. Once those weights have been pruned, the pruned model is then fine-tuned to increase its accuracy to ensure it’s comparable with the base model. In 2023, a paper found that models can be pruned to at least 50% sparsity without retraining and with minimal accuracy loss.

Pruning can be done with the desired hardware capabilities in mind. In 2021, machine learning researchers created the Hardware-Aware Latency Pruning method with an aim at maximizing model accuracy while pruning with target constraints to optimize the pruning efficacy and accuracy and efficiency trade-offs.

Fine-tuning

For narrow use cases like gaming, general large language models are often more than you need. If you’re trying to, for example, create an NPC who can tell a good joke and carry on a conversation, they likely also don’t need to know how to code. Smaller, more specialized models adapted to a specific use case are often the better choice due to lower costs and faster inference.

Fine-tuning allows you to take a smaller model and fine tune it to your specific requirements. This process involves finding additional data and training the new model with it. However, given the expense and time involved in fine-tuning existing models, many opt to use LoRA (Low-Rank Adaptation of Large Language Models) – a fine-tuning technique that reduces the complexity, time, and expense of fine-tuning a model.

Parameter-efficient fine-tuning (PEFT) is another popular fine-tuning technique that reduces the computational expenses by fine-tuning a small subset of the models’ parameters while freezing the original weights of the base LLM.

AI adapters

AI adapters are helping improve the performance of smaller models without extensive retraining. They’re specialized modules with the capability to customize and enhance the efficiency and performance of models on new tasks on a smaller number of parameters allowing for faster training and lower memory requirements.

For example, an adapter could be used to modify a model trained to translate English into Spanish to learn how to translate Spanish into English effectively with just a small dataset of English and Spanish language pairs. Adapters can also be used to train AI agents on new skills to enable them to adapt better to gaming environments with minimal training.

Both cost effective and resource efficient, they’re crucial for enhancing the adaptability of smaller models without having to do extensive additional training or fine-tuning.

One popular technique for adapting models is via Low Rank Adaptation (LoRA), a method developed by a team of researchers at Microsoft to improve the performance of LLMs.

LoRA is able to reduce the number of trainable parameters of models by up to 98% to reduce the training costs and improve performance. These changes in the LoRA adapter are then added to the original values of the model to create a fine-tuned model – or can be run via the LoRA model on-device since they’re typically 2% of the size of base models and are able to work within the constraints of limited device storage and DRAM.

Quantized Low-Rand Adaptation (QLoRA) takes LoRA even further by quantizing the precision of the weight parameters to 4-bit precision to reduce the memory footprint of the model to allow it to work on less powerful devices.

On-device fine-tuning

With more personalized, on-device models, there’s opportunities to do additional fine-tuning on a user’s device to optimize the model for the user’s needs. For example, models might be fine-tuned to better understand a user’s accent or the next gaming command they’re likely to make.

This process uses on-device data to customize the model and was previously done by uploading data to the cloud. However, researchers from MIT, the MIT-IBM Watson AI Lab, and other institutions developed a technique in 2023 that allows deep-learning models to be fine-tuned with new data directly on the device.

This training method, called PockEngine, identifies which parts of the model need to be finetuned and then performs the finetuning while the model is being prepared. This method of on-device training was found to be 15 times faster than other similar methods and led to significant model accuracy improvements.

Efficient on-device fine tuning methods reduce the amount of fine tuning required before the model reaches the customer since the model must only adapt for one use rather than any possible type of user. Fine tuning also improves the model accuracy, efficiency and latency – while making smaller models perform better on device.

Hardware advancements that will make on-device gaming

The consumerization of AI via consumer devices is accelerating with many tech companies releasing AI devices in 2024 or announcing them. That shift is one that the gaming industry will be able to take advantage of.

We cover the emerging AI capabilities to expect across key gaming device segments next.

Mobile

Mobile is fast becoming the device where local AI integration is seen as critical due to the ways a local, personalized AI model consumers always have available could be beneficial. The International Data Corporation (IDC) predicts that GenAI smartphone shipments will grow 364% in 2024 to 234.2 million units. By 2028, that number will increase to 912 million units.

Because of the popularity of mobile gaming, these AI functionalities also offer significant opportunities for studios to use them for everything from procedural content generation and dynamic in-game decisioning to on-device AI companions in mobile RPGs.

The next generation of smartphones will be supercharged with capabilities designed with AI processing in mind including NPUs with over 30 tera operations per second (TOPS) processing speeds and additional RAM to be able to host local language models. Many will also be sold with integrated AI models courtesy of the work that major mobile manufacturers are doing to advance the possibilities in on-device AI model development.

Many major mobile manufacturers have holistic edge AI strategies with both local and cloud AI capabilities and a contextually aware engine that determines whether to process the query locally or in the cloud based on the complexity of the question. The future of on-device AI for mobile involves hybrid deployments.

Here are what some of the major mobile manufacturers and mobile operating systems are working on:

- Apple: Apple was one of the first companies to add NPUs to their devices and many older iPhones have some AI capabilities. However, those NPUs are often optimized for image and sound processing and not natural language processing. Apple’s AI strategy going forward is to leverage hybrid AI models that combine on-device processing with cloud support for more complex tasks to optimize privacy and latency. Apple has also been building mobile-sized models with a focus on improving performance. In 2024, Apple released open-source on-device language models called OpenELM (open-source efficient language models).

- Qualcomm: Qualcomm has long been a leader in on-device AI with their Snapdragon chips and processors that have been successfully used in phones running on-device AI. They continue to work to improve on-device processing capabilities in their models. They also have an AI hub with over 75 plug-and-play mobile GenAI models that devs can leverage in mobile products that can run on their devices.

- Android: Google has a Gemini Nano model that can work locally on Android devices alongside its Pro and Flash models that process on the cloud. Android is focused on how these new capabilities can reinvent what phones can do. Android is particularly focused on bringing multimodal capabilities on-device with models able to understand sights, sounds, and spoken language in addition to text. Android allows developers to use their on-device Nano model for on-device inference for their apps or games.

PC & Macs

The next generation of PCs and Macs are also expected to have significant AI processing capabilities that will enable new kinds of on-device AI gaming. The IDC forecasts that in 2024 nearly 50 million personal computers will be shipped that have system-on-a-chip (SoC) capabilities that are designed to run generative AI locally. By 2027, that number will increase to 167 million computers and represent 60% of all PC shipments.

While there will undoubtedly be AI capabilities integrated into gaming computers, much of the shift to AI hardware is being motivated by business use cases. Microsoft, for example, is focused on integrating Microsoft Copilot into PCs for assistant use cases. Similarly, the key use cases Intel and Apple are talking about are related to work-related tasks that can be done on-device. However, those capabilities present a key opportunity to develop AI gaming experiences on-device.

Here are what some of the major manufacturers are working on:

- Microsoft: Microsoft is working with manufacturers like Acer, ASUS, Dell, HP, Lenovo, Samsung and others to create Copilot+ PCs that are capable of 40+ TOPS. These PCs will be able to process many AI requests locally with Microsoft leveraging OpenAI supported models to do so. The computers will be able to do things like refine AI images, translate audio, and create live captions via AI models run on-device.

- Intel: Intel is working on AI PCs that handle AI workloads via on-device CPUs, GPUs, and NPUs to more efficiently process AI requests. Powered with Intel Core Ultra processors, they’ll be able to do things like summarize meeting transcripts, create drafts, and enable facial recognition on device.

- Apple: Apple will implement a hybrid approach to their MacBooks via on-device models and Private Cloud Compute to supplement their devices’ on-device capabilities on more complex requests.

Console

The gaming market best poised to take advantage of on-device AI might be consoles – but the roll out of AI-enabled devices will happen very differently than with mobile and PCs.

Rather than consumers slowly buying AI-enabled hardware over a period of several years, the shift to AI-enabled gaming could happen all at once since the next-gen consoles for Xbox and PlayStation are rumored to come with built-in NPUs. However, the next generation Xbox isn’t expected to be released until 2028 while the PlayStation 6 is expected as early as 2026 or 2027.

The next Xbox console is rumored to have a neural processing unit from Qualcomm or an AI co-pilot. These rumors align with updates from an Xbox exec in 2024 who suggested that their next generation console will feature one of the biggest technology leaps ever.

While there’s rumors that Sony PlayStation 6 will be released in 2026 or 2027, discovery from Microsoft’s recent Activision acquisition cases show that Xbox expects them to release it in 2028. While nothing has been confirmed by Sony, rumor has it it will have an NPU processor.

Other devices

While the consumerization of AI for gaming is set to roll out predominantly across mobile, PC/Mac, and consoles, on-device capabilities can expand to other devices that aren’t traditionally used for gaming but will be integrating AI capabilities in the future.

For example, Teslas now have on-board games and could potentially implement AI models that could be used for other car functions but which would be available for gaming. The consumerization of AI could open up a number of non-traditional markets for gaming.

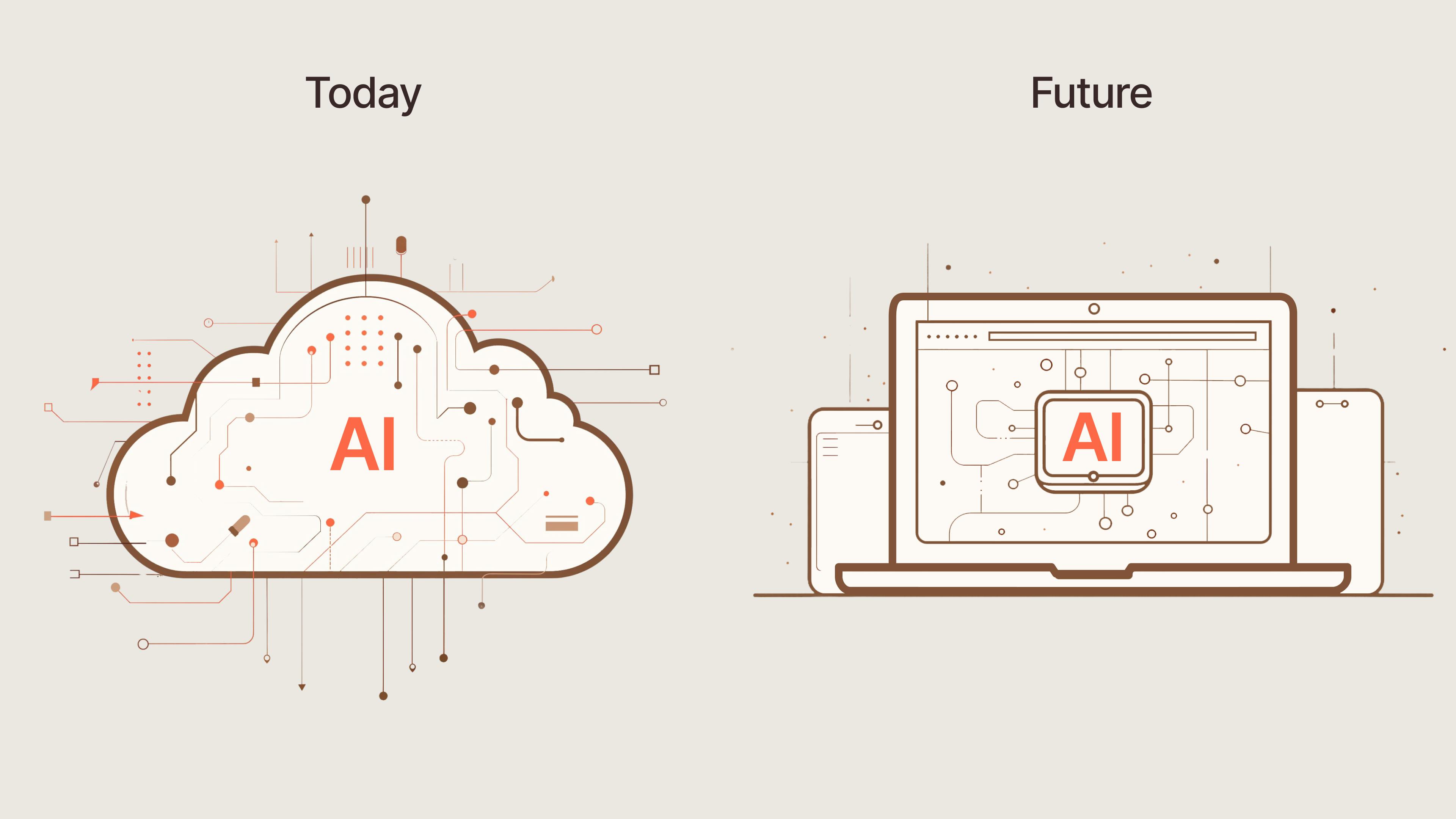

Future of on-device AI gaming

The next several years will be an exciting time for on-device AI and edge AI. With significant advances expected in both ML techniques and consumer gaming devices, fully on-device AI gaming experiences are coming. However, the near-term rollout of on-device gaming is likely to involve hybrid deployments to maximize latency and reduce costs.

Some AI inference tasks are currently able to be performed at comparable quality on-device – like Inworld and NVIDIA demonstrated at Computex this summer. However, even more AI tasks will be able to move on-device in the future. In the meantime, the latency and cost of running gaming AI in the cloud has consistently gone down since 2022 when ChatGPT was released. That downward pricing trajectory is expected to continue with additional improvements in quality and performance.

Read our first post to learn more about how we explored the current state of on-device AI in gaming and what the future of edge AI and on-device AI is expected to look like in gaming.

Interested in learning more?