
Transforming gaming experiences with edge and on-device AI

We explore the current state of on-device AI in gaming and what the future of edge and on-device AI is expected to look like.

How far out is on-device AI gaming? Since ChatGPT went viral in 2022, generative AI has disrupted the video game industry. That’s led to AI-native games like Playroom’s Death by AI attracting 20 million players and industry heavyweights like Xbox, EA, and Ubisoft developing generative AI strategies for innovations in both gameplay and game development.

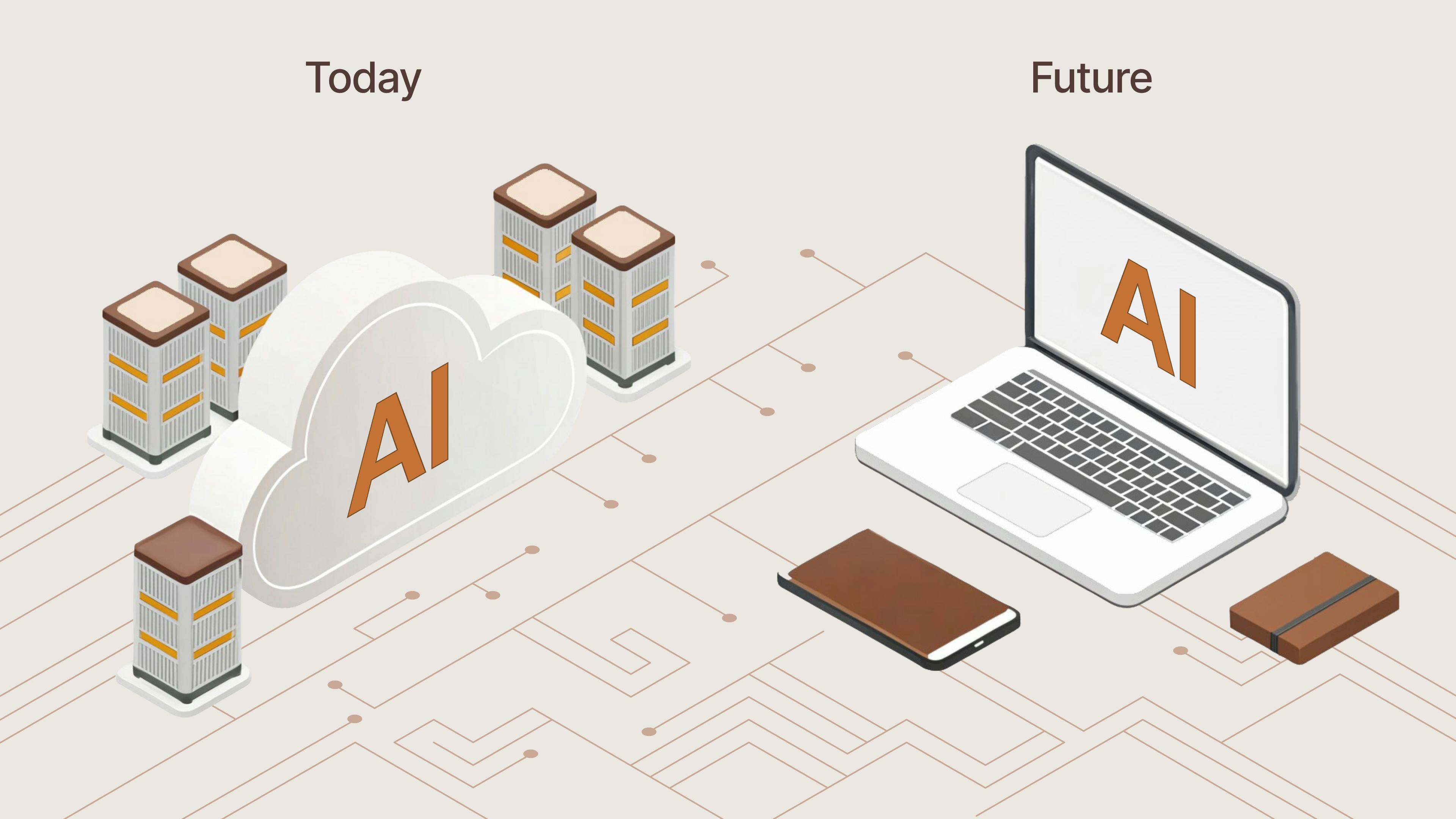

But the AI gaming revolution is still just beginning. With the coming democratization of generative AI through AI-enabled consumer devices and new techniques for model compression focused on increasing the capabilities of on-device AI, the use and impact of AI agents, AI NPCs, and large language models (LLMs) in gaming is expected to accelerate as the serving costs of AI go down.

On-device AI processing capabilities are expected to soon be standard across all categories of gaming devices, enabling studios to integrate AI into more games.

- Leaks suggest that the next-generation consoles from Xbox and Sony will include neural processing units (NPUs).

- The International Data Corporation (IDC) predicts that GenAI smartphone shipments will grow 364% in 2024 to 234.2 million units. By 2028, that number will increase to 912 million units.

- The IDC also forecasts that nearly 50 million personal computers shipped in 2024 will have system-on-a-chip (SoC) capabilities designed to run generative AI locally. By 2027, that number will increase to 167 million computers, representing 60% of all PC shipments.

Combined with optimizations in generative AI models through advancements in areas like distillation, quantization, and new model architectures, these developments are expected to unlock new opportunities for edge, on-device, and hybrid generative AI deployments for gaming.

This post and the next in this two-part series will look at opportunities for leveraging those on-device capabilities, the current state of on-device AI in gaming, and what the future of edge and on-device AI is expected to look like in gaming.

What are the benefits of on-device AI in gaming?

Whether you want to use generative AI to power an autonomous AI companion who can respond dynamically in real time (like PEA in NetEase’s Cygnus Enterprises), generate content via an LLM for a narrative-based party game (like Playroom’s Death by AI), or power AI agents that act as game directors and dynamically generate new branching narratives, running AI on-device offers a number of potential benefits over running it in the cloud.

In the future, on-device AI is expected to offer these benefits:

- Cost savings: By performing inference on-device, studios can significantly reduce the cost of adding AI to a game, for themselves and for players. On-device models don’t incur data transmission or server costs, and those savings can be passed along to gamers.

- Reduced latency: Decentralized AI processing on an edge device greatly reduces latency, since the computation happens at the source rather than requiring data to be sent to the cloud for processing and the result sent back to the device. In real-time use cases like gaming, the milliseconds saved are critical to making the experience feel more responsive and immersive.

- Offline gaming: Cloud-based AI doesn’t just add latency; it also requires gamers to have an active, high-speed internet connection while playing. Depending on how AI is integrated into the game, any disruption to that connection could disrupt gameplay.

- Privacy and personalization: Local processing keeps data on the device and therefore provides better user privacy. Because that data isn’t shared with or stored by a publisher, studio, or third party, games could use additional player data to personalize the experience, data that might raise privacy and security concerns if it were shared off-device.

What’s the current state of on-device AI?

While the future benefits of on-device AI are exciting, on-device AI functionalities for gaming are still in their early stages. Currently, it’s only possible to transfer certain features onto devices that can be run by smaller models without noticeable quality degradation.

Here’s what’s currently possible for on-device AI and the challenges to implementing on-device today:

Some small models are currently possible on-device

Significant advancements have been made in recent years in compressing generative AI models to the point where they can run on commercial devices. For example, in early 2023, Qualcomm AI Research deployed Stable Diffusion, a text-to-image model with more than 1 billion parameters, on an Android phone thanks to an optimized network model, LoRA adapters, the Snapdragon 8 Gen 2 Mobile Platform, and Qualcomm’s Hexagon processor.

That was one of the first generative AI models deployed on a consumer mobile device, and others have been deployed on-device since. It was only possible, though, because the model architecture was aligned with the hardware’s capabilities, and it isn’t currently a commercially viable deployment model because most consumers don’t have phones with AI-optimized chips and processors.

However, smaller and more niche models can run on-device today. For example, at Computex 2024, Inworld and NVIDIA demoed an experience in which NVIDIA Riva for automatic speech recognition (ASR) and NVIDIA Audio2Face were deployed on-device on an NVIDIA RTX AI PC. Inworld powered the character responses in the cloud in this hybrid deployment, but by processing part of the workload on-device, NVIDIA was able to reduce latency and deployment costs.

Companies like Apple and Google are also focused on hybrid on-device AI deployments that pair small, niche local models for processing queries on-device with cloud support for more complex tasks. Meta, meanwhile, is working on smaller open source Llama models that can run on-device.

The capabilities of small language models (SLMs) running on-device, however, are currently rudimentary, and their output quality isn’t well suited to more complex gaming use cases like powering NPC dialogue or acting as a game director. They could work, for example, as a simple decision-making engine. Advances in model compression promise more sophisticated on-device models in the coming years, capable of handling more complex tasks as well as current cloud models handle them today.

Current device hardware

While Apple has been building neural processing units (NPUs) into its devices for years and other companies have been selling AI-optimized consumer devices, those NPUs are often optimized for AI image or sound processing rather than language model inference. That’s because photo quality has long been a key selling point for mobile devices, whereas consumer natural language processing (NLP) and other generative AI applications built on the transformer neural network architecture only emerged in recent years. In addition, graphics currently use most, if not all, of the existing GPU and NPU capacity on devices.

Since ChatGPT was released, though, tech companies have been adding NPUs optimized for language models to many of their consumer devices so that they can run language models in the future. Based on IDC’s forecasts, however, it will likely be 2027 or 2028 before enough of these AI-enabled devices are in players’ hands for a game built to run AI locally to reach a broad audience.

Memory needs

Another current challenge to implementing generative AI on-device is the large memory footprint of language models. The smallest models available today require at least two gigabytes of RAM. Mobile devices typically have at least four gigabytes of memory, and high-end phones go up to 24 gigabytes, but many widely sold devices have just 12 to 16 gigabytes. Similarly, many basic PCs have only four to 16 gigabytes of RAM in total, while gaming PCs typically have more than 16 gigabytes.
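To see where a roughly two-gigabyte floor comes from, and why quantization matters so much here, a back-of-the-envelope estimate helps: weight memory is approximately the parameter count multiplied by the bytes stored per weight. The sketch below is a simplification that counts weights only (no activations, KV cache, or runtime overhead), and the model sizes in it are hypothetical examples rather than specific products.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Rough memory needed to hold a model's weights, in gigabytes (1 GB = 10**9 bytes).

    Counts weights only: activations, the KV cache, and runtime overhead are
    ignored, so actual RAM use will be higher.
    """
    bytes_per_weight = bits_per_weight / 8
    return num_params * bytes_per_weight / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes and precisions, chosen only for illustration.
    for params in (1e9, 3e9, 7e9):
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{params / 1e9:.0f}B params at {bits}-bit: ~{gb:.1f} GB of weights")
```

A 1-billion-parameter model stored at 16-bit precision comes out at about 2 GB, in line with the minimum above; quantizing it to 4 bits cuts that to about 0.5 GB, which matters on devices that must also hold the game itself.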

That means many gamers might not have enough available memory to run multiple models on-device. And because many other apps are looking to implement their own on-device models as well, current devices aren’t expected to have enough RAM to properly run all the AI features their users want. IDC predicts that 16GB of RAM will become the minimum for AI smartphones and expects the amount of memory in phones to double, which will make AI smartphones more expensive.

One workaround could be for the tech companies selling the devices to ship a model on-device and then allow app and game developers to train adapters that customize this core model for their use case. Android already allows this on an experimental basis with its Gemini Nano on-device model, but that solution might not be ideal, depending on how well the base model fits the use case.
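Adapters are attractive here because they are tiny relative to the base model. As an assumption, the sketch below uses LoRA (low-rank adaptation), the adapter technique mentioned in the Qualcomm example earlier; it isn’t a claim about how any specific platform implements adapters. LoRA freezes a weight matrix W and learns a low-rank update B·A, so each game would only need to train and ship A and B. The helper names and layer sizes are hypothetical.

```python
def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in one LoRA adapter for a d_out x d_in weight matrix.

    LoRA keeps the base weight W frozen and learns a low-rank update B @ A,
    where B is d_out x rank and A is rank x d_in.
    """
    return rank * (d_out + d_in)


def matvec(matrix: list[list[float]], vec: list[float]) -> list[float]:
    """Plain-Python matrix-vector product."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, rank: int) -> list[float]:
    """y = W x + (alpha / rank) * B (A x); only A and B would be trained per game."""
    base = matvec(W, x)              # shared, frozen on-device base weights
    delta = matvec(B, matvec(A, x))  # game-specific low-rank adapter
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


if __name__ == "__main__":
    # Hypothetical layer size and rank, for illustration only.
    d_out, d_in, rank = 2048, 2048, 8
    adapter = lora_param_count(d_out, d_in, rank)
    full = d_out * d_in
    print(f"adapter: {adapter:,} params vs. full matrix: {full:,} ({adapter / full:.2%})")
```

With these example numbers, the adapter is about 0.78% of the matrix it customizes, so the device can keep one shared base model in memory while each game brings only a small adapter.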

Current performance issues with smaller models

While it’s possible to process some requests locally on-device today, there can be a noticeable drop in response quality. Current techniques for shrinking LLMs can degrade model quality in a variety of areas. However, it’s often possible to fine-tune these smaller models for very specific tasks and achieve quality that’s on par or acceptable. That process requires highly skilled machine learning engineers with experience distilling cloud models for on-device use cases, a skill most gaming companies don’t currently have in-house. Given the high salaries that in-house LLM expertise commands, this is likely a key part of the work the industry will outsource to companies like Inworld, which can distill models for a studio’s specific use case.
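For readers unfamiliar with distillation, the core idea is to train a small "student" model to match the softened output distribution of a larger "teacher" model, alongside the usual ground-truth labels. The sketch below shows the textbook soft-target distillation loss for a single example; it is not Inworld’s pipeline, and the `temperature` and `alpha` defaults are hypothetical.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label: int,
                      temperature: float = 2.0, alpha: float = 0.5) -> float:
    """Soft-target knowledge distillation loss for one example.

    alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, label)
    The T^2 factor keeps the soft-target term on a comparable scale as T changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL divergence from the teacher's softened distribution to the student's.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Ordinary cross-entropy against the hard label, at temperature 1.
    hard = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard
```

In practice, the teacher would be the larger cloud model and the student the on-device model; this loss would be averaged over a task-specific dataset and minimized with an ML framework’s optimizer rather than computed in plain Python.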

In the future, models with the quality of today’s cloud AI will be able to run locally on-device, but there will simultaneously be newer cloud models with even more advanced capabilities available on the server side. Game devs will have to decide case by case whether on-device or cloud AI makes more sense for their needs, weighing the benefits and drawbacks of each deployment method.

Expect many developers to choose a hybrid deployment model similar to the one NVIDIA showed with Covert Protocol at Computex, with some inference happening on-device while additional processing happens in the cloud, reducing latency and costs while ensuring that cutting-edge models run the key functions.
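One way to picture such a split is a per-task router. The sketch below is a hypothetical policy, not how NVIDIA or Inworld built Covert Protocol; `AITask`, `route`, and the task names are illustrative assumptions. It sends quality-critical work to the cloud, keeps lighter tasks such as speech recognition on-device, and falls back to local models when the player is offline.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"
    UNAVAILABLE = "unavailable"


@dataclass(frozen=True)
class AITask:
    name: str
    has_local_model: bool       # a small model for this task fits on the player's device
    needs_frontier_model: bool  # quality-critical, e.g. open-ended NPC dialogue


def route(task: AITask, online: bool) -> Target:
    """Decide where one AI subtask runs under a simple, hypothetical hybrid policy."""
    if not online:
        # No connection: fall back to the local model if there is one.
        return Target.DEVICE if task.has_local_model else Target.UNAVAILABLE
    if task.needs_frontier_model or not task.has_local_model:
        # Quality-critical or no local option: use the larger cloud model.
        return Target.CLOUD
    # Otherwise run locally to save server cost and a network round trip.
    return Target.DEVICE


if __name__ == "__main__":
    # Hypothetical task split, loosely modeled on the Computex demo described earlier.
    tasks = [
        AITask("speech_recognition", has_local_model=True, needs_frontier_model=False),
        AITask("facial_animation", has_local_model=True, needs_frontier_model=False),
        AITask("npc_dialogue", has_local_model=False, needs_frontier_model=True),
    ]
    for task in tasks:
        print(task.name, route(task, online=True).value, route(task, online=False).value)
```

A real policy would likely also weigh per-request latency budgets, device load, and server cost; the point here is only that the split can be decided per task rather than per game.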

Cloud AI latency and cost improvements

While work toward on-device AI continues, there have also been breakthroughs in reducing the inference latency and cost of cloud AI. AI models developed for real-time applications currently often respond faster on the server side than on-device.

Generative AI has also become significantly more cost-efficient since ChatGPT launched in 2022. Today, the cost of cloud AI is lower than what gamers say they’re willing to pay for it. For example, our study of 1,000 gamers found that the average amount they were willing to pay for generative AI gaming features was $10 a month, and that nearly 50% of gamers were willing to pay between $11 and $20 per month.

When deciding between cloud and on-device AI, game devs should consider how critical the AI-driven gameplay function is. In some cases, higher-quality AI will improve sales and gameplay metrics enough to more than make up for its cost.

What’s more, on-device AI and hybrid AI deployments can take more development time to architect and require in-house machine learning expertise. Deploying server side is comparatively less expensive and will always give players access to the most cutting-edge models. That could make cloud or hybrid deployment models more popular in the future. Cloud deployment models have already been proven to be cost-efficient and performant in real-time gaming use cases.

See how Playroom successfully deployed Inworld in the cloud to 20 million players.

Future of edge AI and on-device AI in gaming

Curious about what the future of edge AI and on-device AI will look like? In the next post in this series, we’ll explore the advancements in machine learning (ML) architecture and consumer devices that are making on-device and edge AI possible. We’ll also look at the impact that will have on gaming.
