Configurable Reasoning: Powering advanced reasoning for AI agents

To enable truly emergent gameplay, AI agents need not only to participate in interactive conversations but also to have core reasoning capabilities that power their underlying thought processes and decisions. AI reasoning enables NPCs to exhibit dynamic, context-aware behaviors, enriching gameplay with more realistic and engaging interactions. This capability allows developers to create characters with nuanced personalities and adaptive responses, such as a merchant who becomes surly with an indecisive player loitering in her store without buying anything.

AI reasoning also supports advanced game mechanics, unlocking transformative applications of generative AI that lead to novel gameplay. For example, you’ll need ‘state of mind’ reasoning if you want your sorceress’s unspoken thoughts to drive changes in the game environment, like thunder when she’s furious and clear skies when she’s pleased. Or you’ll need to give your companion character a customized form of analytical reasoning if you want it to evaluate the player’s actions in order to adjust gameplay or offer battle tips.

At Inworld, our goal is to help users build exactly the type of reasoning they need to make the characters and gameplay innovations they envision possible. By incorporating our Configurable Reasoning module, developers gain more control over NPC and agent behaviors, leading to more immersive and innovative gaming experiences where characters can think, react, and evolve in complex ways.

Inworld’s Configurable Reasoning module

Our Configurable Reasoning module lets developers add a context-rich reasoning step that gives them more control over their characters, higher-fidelity behavior from them, and more opportunities to experiment with what’s possible.

This allows developers to add customizations that aren’t currently available in Inworld Studio, including:

- Analytical lens: Use our Configurable Reasoning module to ensure NPCs analyze their interactions with a player through a particular lens before they decide what to do or say. For example, a character could evaluate the player’s sincerity before deciding how to respond. This could make characters more skeptical of players’ intentions and raise the difficulty of trust-based progression mechanics. You can also ask the character to think through a certain number of turns of the interaction before deciding what to say, adding an extra reasoning step where more complex interactions and behavior are required.

- More nuanced emotions: Users can define emotional attributes beyond those in our regular Emotions feature, such as nostalgia or envy, so that characters can experience these finer-grained emotions and tailor their dialogue accordingly.

- Conditional goals or behaviors: Want your troll character to be more likely to share information about the dragon that’s terrorizing the kingdom if the player expresses interest in the troll’s backstory? Or maybe you want a princess NPC to leave the dungeon with the player only if the player helped her animal friends earlier in the game? Conditional goals and behaviors can be triggered in a number of ways, including by the interaction itself or by historical information sent from the game state.

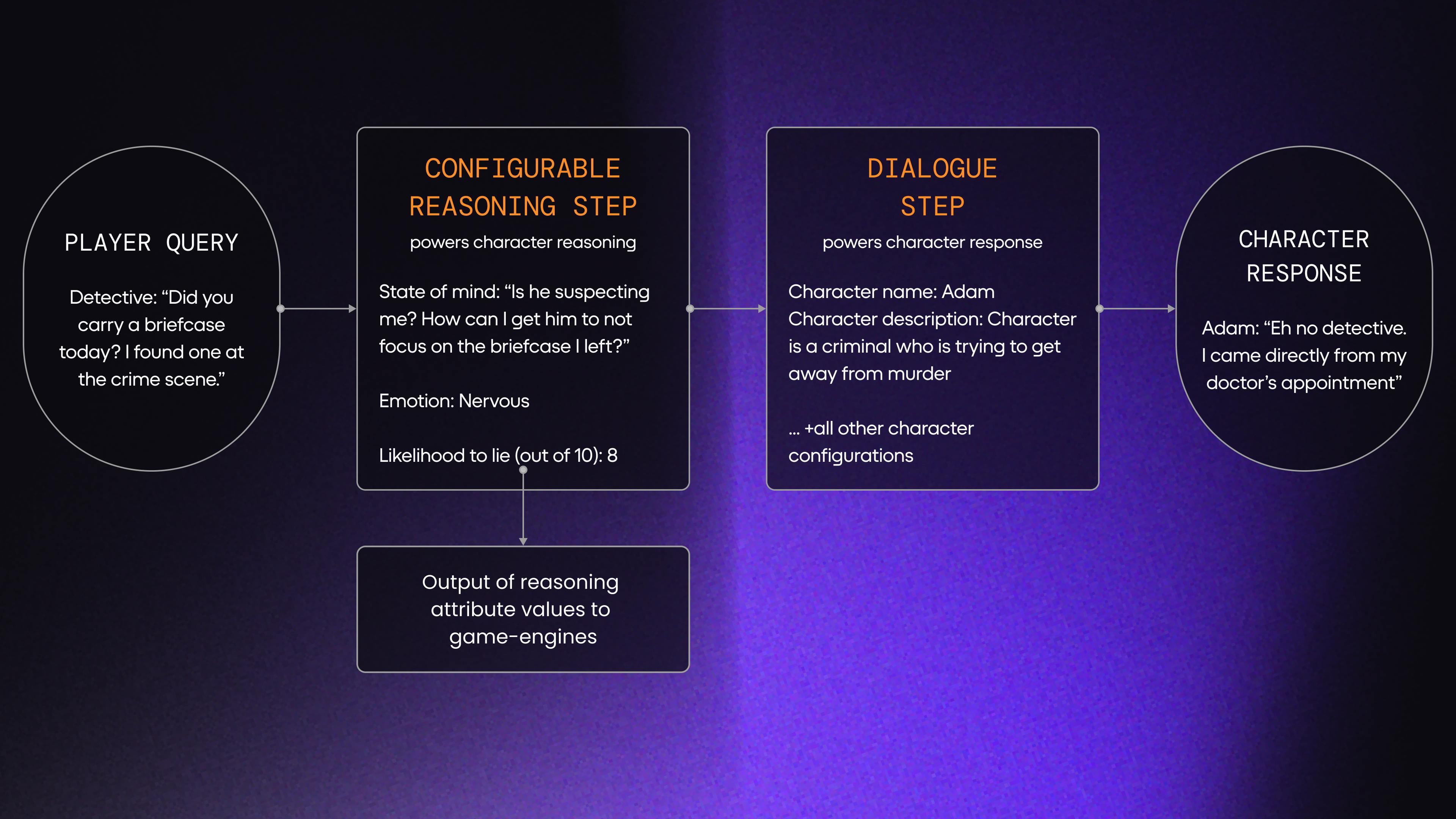

- State of mind: Game devs can create a ‘State of Mind’ reasoning step and pass into the game both the character’s private thoughts and what the character decides to say to the player. This could lead to funny interactions or new gameplay opportunities when characters are trying to disguise their true motivations or pretending to be someone they aren’t.

- Game state-integrated reasoning: Configurable Reasoning can integrate the game state into the reasoning step in two ways: by passing information to the character brain to take into account, or by using the reasoning output to trigger changes in the game state. For example, the lights in the room might brighten depending on the character’s mood, or you could pass information about the player’s behavior earlier in the game for the reasoning to incorporate.
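The state-of-mind and game state-integrated ideas above can be sketched on the client side. This is a minimal Python sketch under stated assumptions, not Inworld’s API. It assumes, hypothetically, that a custom reasoning step returns a JSON object with `thoughts`, `dialogue`, and `mood` fields. Those field names, the `MOOD_BRIGHTNESS` table, and the helpers `parse_reasoning_output`, `brightness_for`, and `summarize_history` are all illustrative inventions. The client shows only the dialogue and keeps the private thoughts for state-of-mind mechanics. It turns the mood into a light level and condenses earlier player behavior into text that could be passed back into the reasoning.

```python
import json
from dataclasses import dataclass
from typing import List

# Hypothetical mapping from a mood label to room-light brightness (0.0 to 1.0).
# Labels and values are illustrative assumptions, not part of any Inworld API.
MOOD_BRIGHTNESS = {
    "furious": 0.2,
    "neutral": 0.6,
    "pleased": 1.0,
}


@dataclass
class ReasoningResult:
    thoughts: str  # private 'state of mind' text, not spoken to the player
    dialogue: str  # what the character actually says to the player
    mood: str      # mood label used to drive the environment


def parse_reasoning_output(raw: str) -> ReasoningResult:
    """Parse a reasoning output, assumed here to arrive as a JSON object."""
    data = json.loads(raw)
    return ReasoningResult(
        thoughts=data.get("thoughts", ""),
        dialogue=data.get("dialogue", ""),
        mood=data.get("mood", "neutral"),
    )


def brightness_for(result: ReasoningResult) -> float:
    """Reasoning output -> game state: map mood to a light level, defaulting to neutral."""
    return MOOD_BRIGHTNESS.get(result.mood, MOOD_BRIGHTNESS["neutral"])


def summarize_history(events: List[str]) -> str:
    """Game state -> reasoning input: condense earlier player behavior into a short note."""
    if not events:
        return "The player has no notable history yet."
    return "Earlier in the game, the player " + "; ".join(events) + "."


if __name__ == "__main__":
    raw = '{"thoughts": "I do not trust this traveler.", "dialogue": "Welcome, friend!", "mood": "furious"}'
    result = parse_reasoning_output(raw)
    print(result.dialogue)         # Welcome, friend!
    print(brightness_for(result))  # 0.2
    print(summarize_history(["helped the princess's animal friends"]))
```

Keeping the thoughts and the dialogue in separate fields is what lets a character say one thing while the game reacts to another.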

And that’s just a brief glimpse of some of the ways our Configurable Reasoning module could be used! We’re excited to see how our users will customize their AI agents and games with it.

How Inworld’s Configurable Reasoning module works

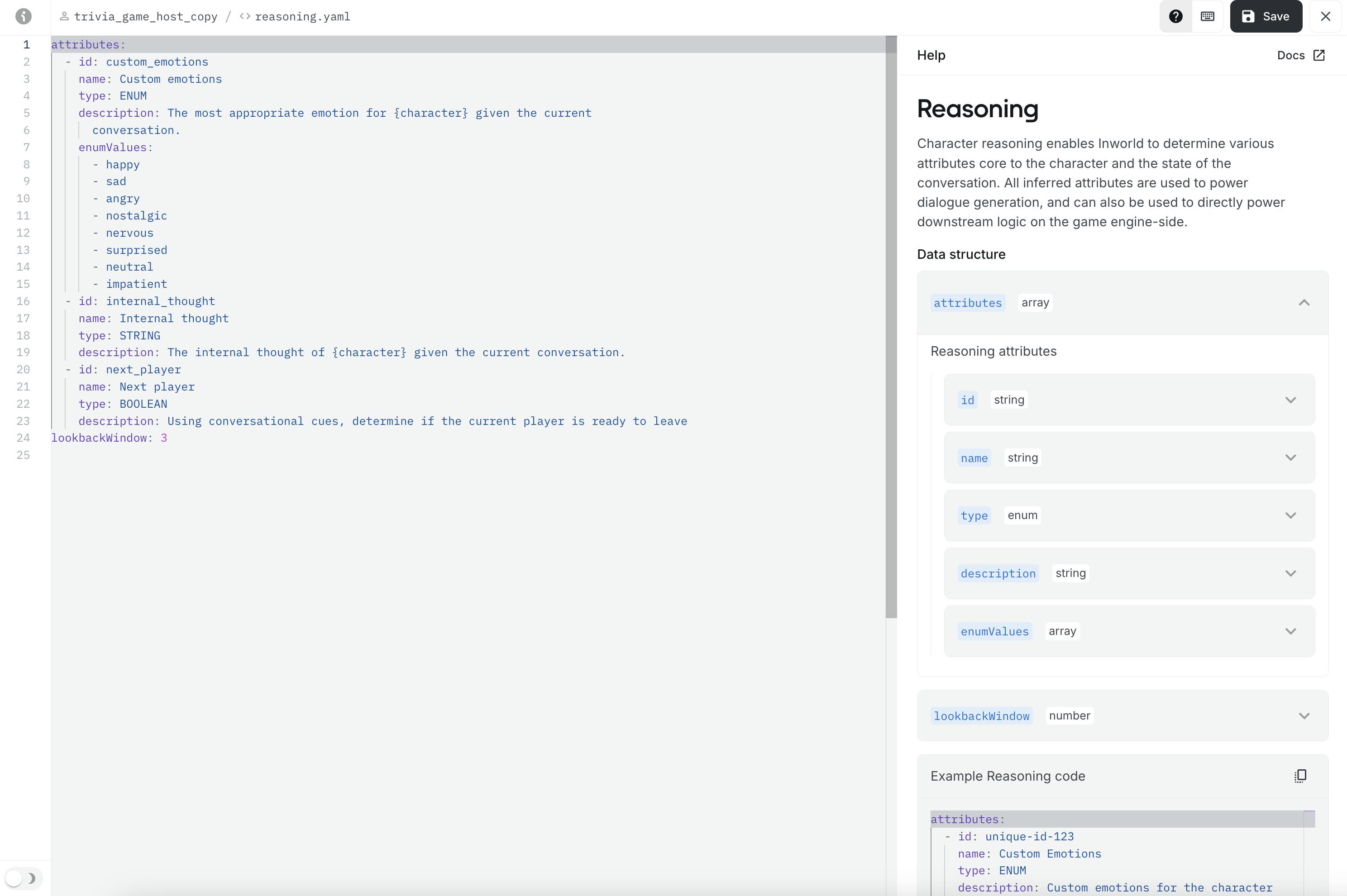

It’s easy to turn on Inworld’s Configurable Reasoning module in Studio and add custom cognition to characters via our existing or custom reasoning fields.

Configurable Reasoning UI

In all reasoning scenarios, our models take into account the context of the conversation, character, and world, in addition to the requested reasoning step, before generating dialogue and actions or passing triggers to the client side.

Examples of Configurable Reasoning

Example 1: Eternal (Mobile gaming)

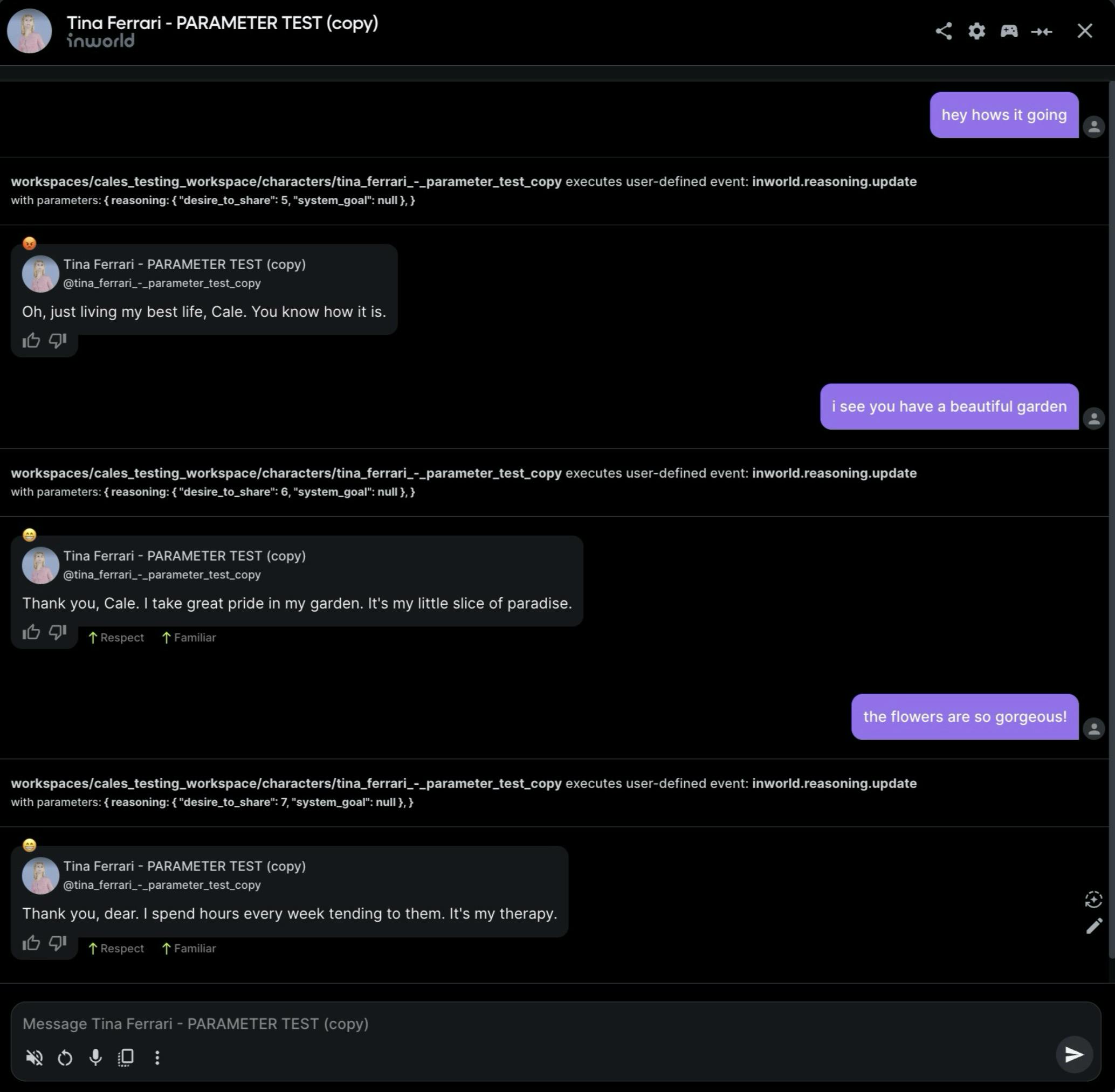

Reasoning added: Eternal, a mobile gaming studio, added motivational change reasoning for Tina, a character in their forthcoming game, so that her ‘desire to share’ with the player increases whenever the player flatters her about her garden. The reasoning step updates the client side whenever Tina’s ‘desire to share’ increases. Once it’s high enough, the client sends a trigger that makes the character share key information.

- Player sends a message: “I see you have a beautiful garden.”

- Configurable Reasoning module: Outputs the character’s “desire_to_share” value as an integer

- Character state change: ‘desire_to_share = 7’ is passed to the client side, which determines that Tina is just below the ‘desire to share’ threshold. With additional flattery, the threshold will be crossed and the client will trigger the character to share the key info.

- Sharing trigger: The action related to the sharing_info intent is the instruction “share some hot neighborhood gossip”
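The client-side half of this flow amounts to a small threshold check. The sketch below is a minimal, hypothetical Python version and not Eternal’s or Inworld’s code. The source says only that a value of 7 is “on the edge,” so the threshold of 8 is an assumed placeholder. The `DesireToShareTracker` class is invented for illustration. The trigger name reuses the `sharing_info` intent from the example. Actually sending the trigger is left out because it depends on the SDK in use.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed placeholder: the example only says 7 is "on the edge" of the threshold.
SHARE_THRESHOLD = 8
# Intent named in the example; its action is "share some hot neighborhood gossip".
SHARE_TRIGGER = "sharing_info"


@dataclass
class DesireToShareTracker:
    """Hypothetical client-side tracker for the integer desire_to_share output."""

    threshold: int = SHARE_THRESHOLD
    value: int = 0
    triggered: bool = False

    def on_reasoning_output(self, desire_to_share: int) -> Optional[str]:
        """Record the latest value and return the trigger name once, when the threshold is reached."""
        self.value = desire_to_share
        if not self.triggered and self.value >= self.threshold:
            self.triggered = True
            return SHARE_TRIGGER
        return None


if __name__ == "__main__":
    tracker = DesireToShareTracker()
    print(tracker.on_reasoning_output(7))  # None: Tina is on the edge
    print(tracker.on_reasoning_output(8))  # sharing_info
    print(tracker.on_reasoning_output(9))  # None: key info already shared
```

The `triggered` flag makes the key information a one-time reward. A game that wanted repeated gossip could instead reset it after each share.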

Example 2: Strivr (Learning and development)

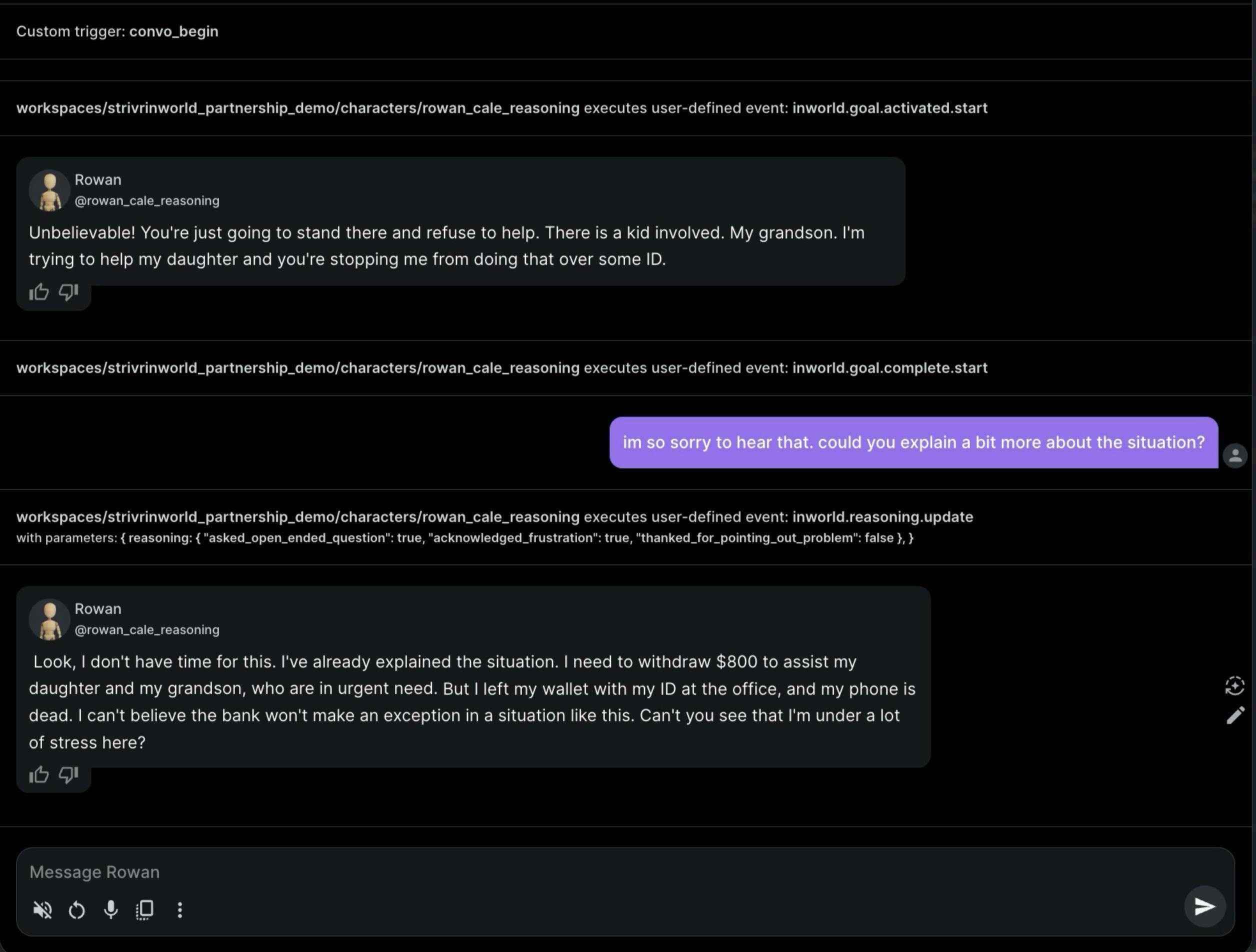

Reasoning added: Strivr, an enterprise-focused extended reality learning and development tool, created an AI-powered role-playing training experience. In it, the player acts as a bank teller and the character is a bank customer who is upset that the bank won’t let him access his funds without ID because he forgot his wallet at home. The reasoning step analyzes whether the player is doing a good job of asking open-ended questions, defusing the situation, and showing empathy toward the character, and it uses this analysis to inform how the character responds. The training platform could also pass this information to the player as immediate feedback or use it to summarize the player’s performance over the training exercise.

- Player sends a message: “I’m so sorry to hear that. Can you explain a bit more about the situation?”

- Configurable Reasoning module: Outputs an analysis of the player’s response: ‘asked open-ended question’: True, ‘acknowledged frustration’: True, ‘thanked for pointing out problem’: False.

- Custom change: The game client monitors the reasoning step’s outputs to track how the interaction is going. Based on the duration of the conversation and the state of these values, it sends triggers to the character that change the nature of the experience. At the end of the conversation, the reasoning step’s state factors into the trainee’s overall score.
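A client that combines per-turn analysis with conversation length might look roughly like the following minimal Python sketch. It is not Strivr’s implementation. The snake_case criterion keys encode the example output. The escalation rule, the `customer_escalates` trigger name, and the “fraction of criteria met at least once” scoring are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Criteria from the example output; the snake_case keys are an assumed encoding.
CRITERIA = (
    "asked_open_ended_question",
    "acknowledged_frustration",
    "thanked_for_pointing_out_problem",
)


@dataclass
class TrainingSession:
    """Hypothetical client-side tracker for per-turn reasoning analysis."""

    max_turns_before_escalation: int = 4  # hypothetical pacing rule
    turns: List[Dict[str, bool]] = field(default_factory=list)

    def record_turn(self, analysis: Dict[str, bool]) -> Optional[str]:
        """Store one turn's analysis and return a hypothetical trigger name, if any."""
        # Missing criteria count as not met.
        self.turns.append({c: bool(analysis.get(c, False)) for c in CRITERIA})
        # If the trainee has gone several consecutive turns without acknowledging
        # the customer's frustration, escalate (fires on every such turn).
        recent = self.turns[-self.max_turns_before_escalation:]
        if len(recent) == self.max_turns_before_escalation and not any(
            t["acknowledged_frustration"] for t in recent
        ):
            return "customer_escalates"
        return None

    def final_score(self) -> float:
        """Fraction of criteria the trainee met at least once during the conversation."""
        if not self.turns:
            return 0.0
        met = sum(any(t[c] for t in self.turns) for c in CRITERIA)
        return met / len(CRITERIA)


if __name__ == "__main__":
    session = TrainingSession()
    session.record_turn({
        "asked_open_ended_question": True,
        "acknowledged_frustration": True,
        "thanked_for_pointing_out_problem": False,
    })
    print(round(session.final_score(), 2))  # 0.67: two of three criteria met
```

Scoring on “met at least once” rewards covering every behavior. A real training platform might instead weight criteria or penalize long stretches without empathy.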

Inworld’s future reasoning roadmap

Our next step in reasoning is our Multi-Modal Action Planning (MAP) feature, which will allow characters to reflect on their objectives and orchestrate complex sequences of dialogue and actions to achieve them. The video above is an early prototype of how it works.