
How NetEase integrated Inworld in Cygnus Enterprises, Part 1

Team Miaozi (NetEase) walks through how they integrated Inworld into Cygnus Enterprises in this two-part series. Part 1 focuses on the technical implementation.

Earlier this year, Team Miaozi (NetEase) decided to add AI NPCs to Cygnus Enterprises, the company’s Early Access release. The game, an Action RPG with sandbox base management elements, has a sassy and sarcastic companion named PEA (short for Personal Electronic Assistant) that they thought would be the perfect NPC to bring to life with AI.

With all the ChatGPT-fueled excitement in early 2023 around how generative AI could revolutionize NPC interactions and behavior, the game’s designers, whose credits include Far Cry 2, Halo Wars, and Sea of Thieves, wanted to experiment with the tech.

“We wanted to be the first to launch a commercial game with AI NPCs on Steam,” said Oscar Lopez, Cygnus Enterprises’ Senior Product Project Manager. “Our team sees AI NPCs as the next big revolution in video games.”

But the 50-person team didn’t just want to change how players could speak to NPCs; they also wanted to test ways generative AI could fundamentally change gameplay. So they set about fully integrating PEA into the game's loops, developing novel game dynamics, and enabling voice commands for things like gameplay actions.

During the three months they worked on the project, the NetEase team had to make choices about how to integrate AI into their game world and faced challenges in building the AI version of PEA. In the end, they found innovative solutions that resulted in a truly unique and engaging game.

In this two-part series, we’ll walk you through the choices and technical challenges they faced – and show you how they created their groundbreaking game. In the first part, you’ll find out about the gameplay and technical choices they made, and in the second part, we'll cover their approach to narrative design with AI NPCs. You can also check out our Case Study to learn more about why Team Miaozi chose the Inworld Character Engine to power their NPCs.

Details

- Engine: Unity

- Implementation: Post-launch

- Genre: Action RPG/Base management

- Team size: 50 people

- Time: 3 months

- Try it out: Steam

Game narrative & game loops

Before we dive into the specifics of NetEase’s integration, here’s some background you should know about the game.

Cygnus Enterprises is a top-down shooter set in the far future, after humanity has colonized nearby solar systems. The game's namesake is a mega-corporation that provides faster-than-light travel ships to settlers, allowing people and resources to move between settlements. The player takes on the role of a contractor on a derelict outpost located on a frontier planet. The player’s goal? Turn the outpost you arrive at into a populous colony.

“The game is designed so each combat mission gives players what they need to build and maintain the base to bring that outpost that much closer to becoming a thriving settlement,” said Brian Cox, the game’s Lead Programmer and a AAA veteran with credits on Far Cry 2, Halo Wars, and Sea of Thieves.

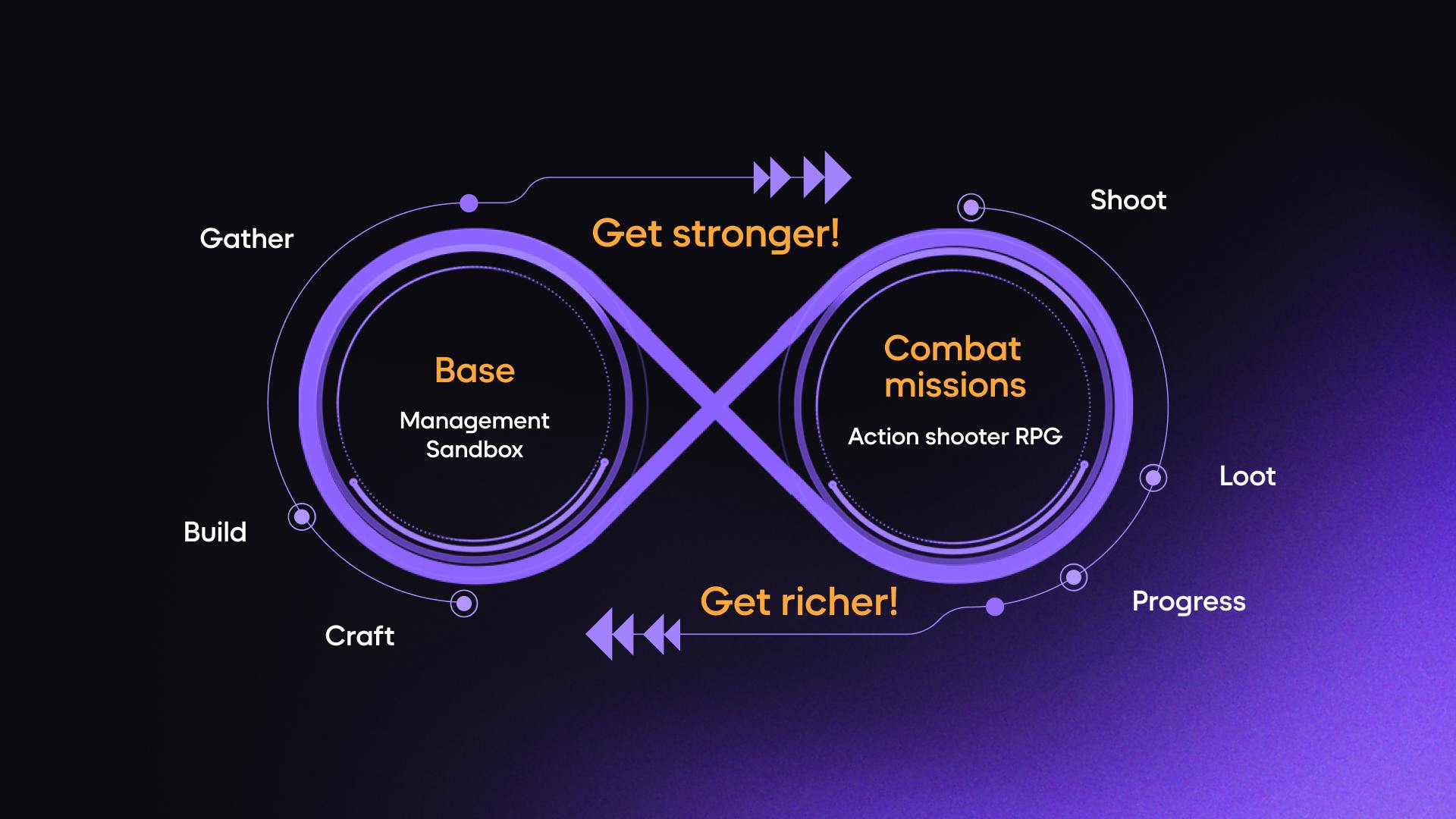

Cygnus Enterprises has two main game loops:

- Combat missions: Shoot → Loot → Progress

- Base Management: Gather → Build → Craft

Gameplay implementation choices

NetEase’s development team wanted to do innovative things with Cygnus Enterprises’ gameplay with the help of AI NPCs. Here are the implementation choices they made.

Goals & Actions with situational awareness

Setup of dynamic intent recognition with dynamic parameters

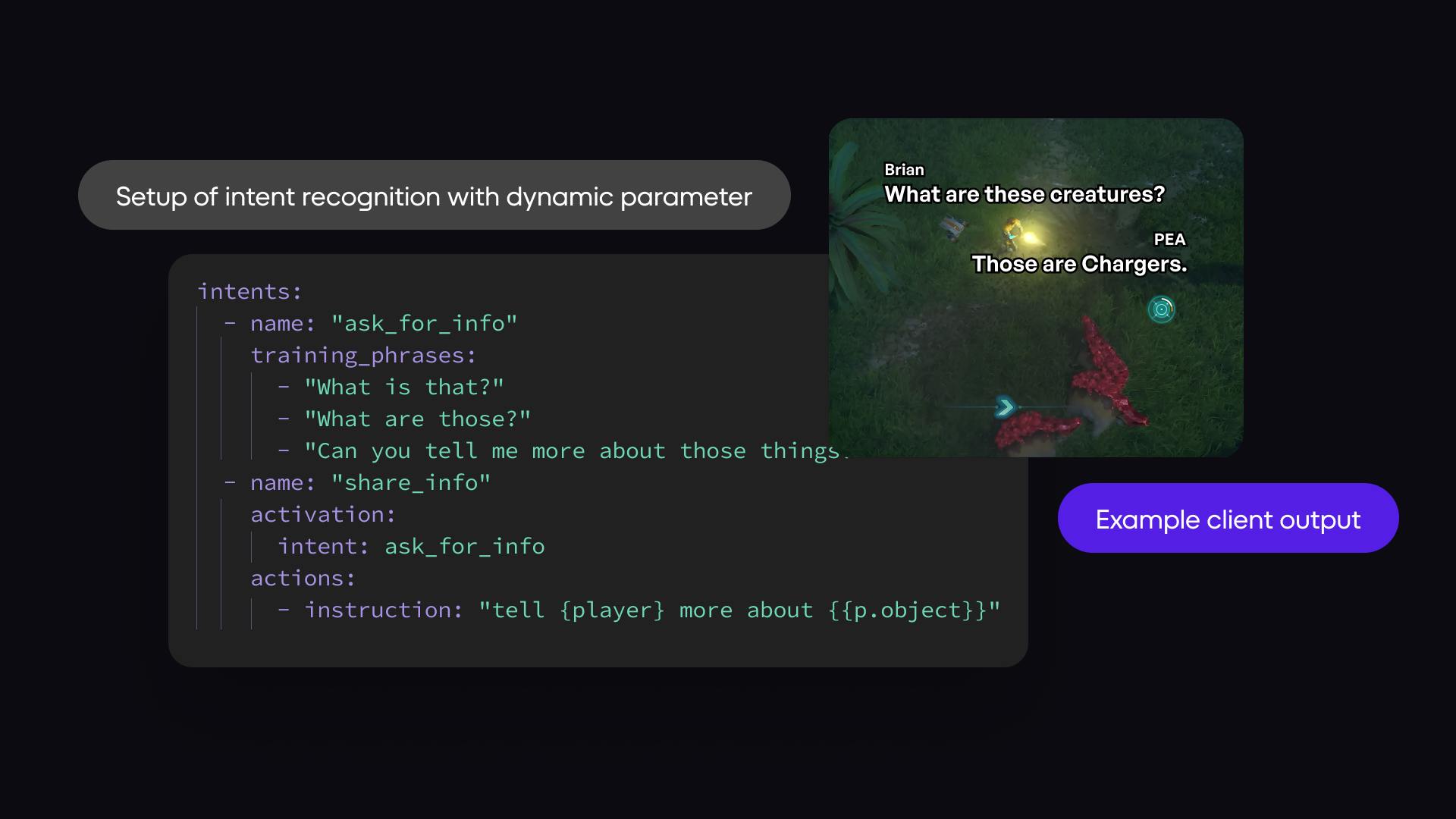

Inworld’s Goals and Actions feature is a powerful tool that allows developers to program their NPCs with new kinds of action- or dialogue-based triggers. Game developers can specify the goals that an NPC wants to achieve, as well as the actions the NPC will take to achieve those goals.

For example, if an NPC is programmed with a goal of telling the player about a quest they need to complete, the character will look for the first opportunity in a conversation to tell the player about it. NPCs can also be given goals that are triggered by the player mentioning something, doing something, or even reaching a certain level of trust with the character. In that case, the NPC waits until the goal has been triggered before sharing the information or performing the programmed action.

This allows NPCs to behave in a much more realistic way, adapt to changing circumstances, and give help or information only if the player asks the right question or takes a particular action.
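Goals and their triggers are configured in Inworld rather than written as game code, so the sketch below is only a hypothetical Python model of the trigger logic described above, not Inworld's API. It assumes a goal may be gated by a keyword the player mentions, a game event, a minimum trust value, or any combination of these (all configured conditions must hold); a goal with no conditions fires at the first opportunity. The names `Goal` and `next_response` are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Goal:
    """Hypothetical goal: a line the NPC delivers once its trigger conditions hold."""
    name: str
    response: str
    keyword: Optional[str] = None      # player must mention this word
    event: Optional[str] = None        # game must have reported this event
    min_trust: Optional[float] = None  # player's trust must reach this value
    done: bool = False                 # each goal fires only once

    def is_triggered(self, utterance: str, events: Set[str], trust: float) -> bool:
        if self.done:
            return False
        # Every configured condition must be satisfied; unset ones are ignored,
        # so a goal with no conditions fires at the first opportunity.
        if self.keyword is not None and self.keyword.lower() not in utterance.lower():
            return False
        if self.event is not None and self.event not in events:
            return False
        if self.min_trust is not None and trust < self.min_trust:
            return False
        return True


def next_response(goals: List[Goal], utterance: str, events: Set[str], trust: float) -> Optional[str]:
    """Return the first triggered goal's line, or None to let normal dialogue continue."""
    for goal in goals:
        if goal.is_triggered(utterance, events, trust):
            goal.done = True
            return goal.response
    return None
```

For instance, a goal with `event="boss_nearby"` stays silent until the game reports that event, which mirrors the boss warning described below.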

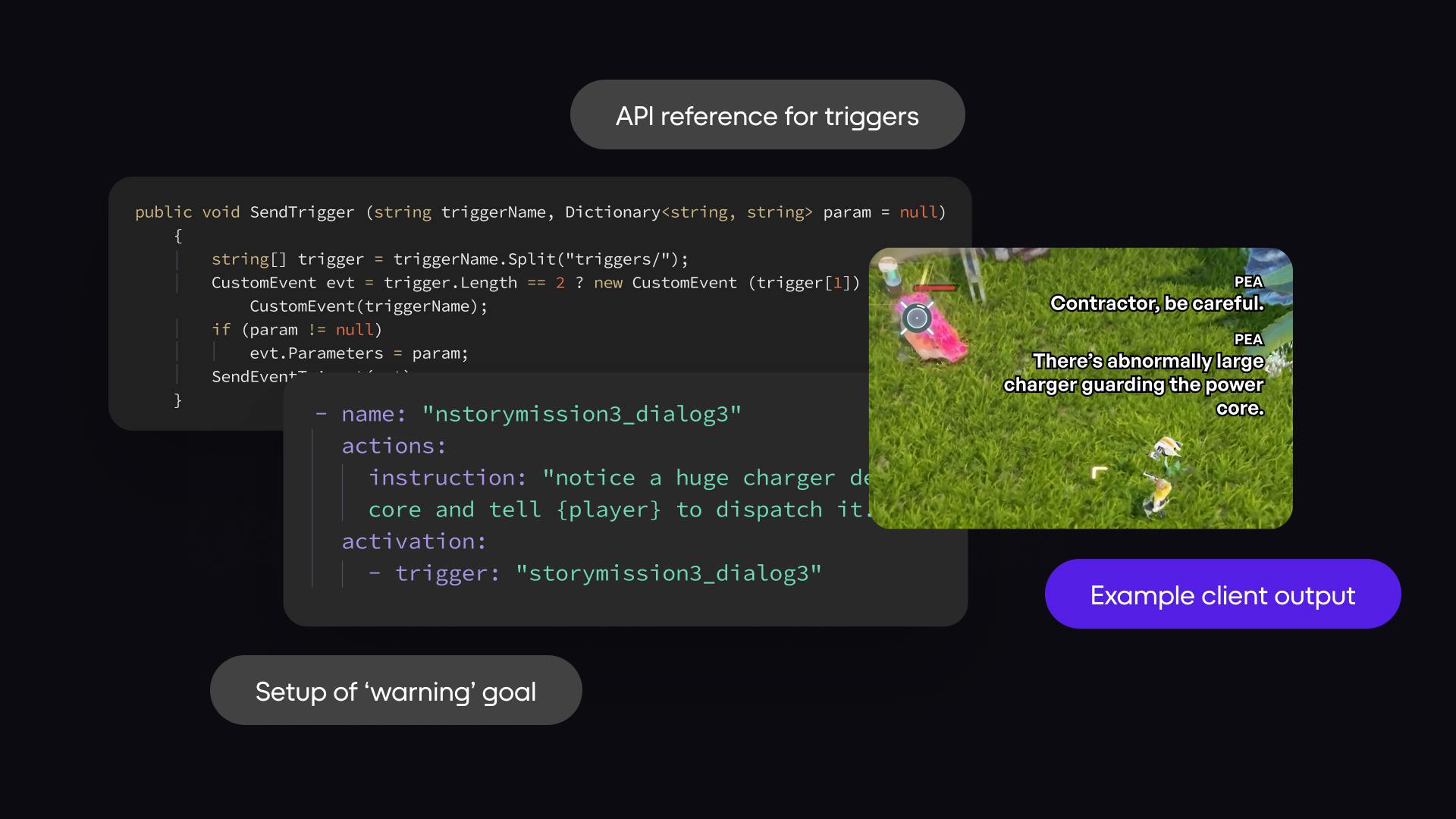

Team Miaozi wanted to implement this in dynamic and innovative ways that would be particularly helpful within an Action RPG. Examples of their Goals and Actions implementations include having PEA warn the player about a particularly powerful boss coming up and having her describe an environment when the player first enters it.

API Reference for triggers

Another example of Team Miaozi’s Goals and Actions implementation is allowing players to ask PEA what something is when they encounter it. “It’s such a generic question,” said Brian. “With Inworld’s intent recognition, PEA can detect that when a player says this, they’re looking for more information about something in the environment.”

Brian set up a way to pass environment and game parameters to PEA from the client side in real-time so she can almost instantaneously answer that question – and give the player more information about what kind of creature they’re engaging with or even share additional lore about the world. “We wanted to create a truly helpful character,” explained Brian.
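The article doesn't show how Brian's client code gathers these parameters, so the following is a hypothetical Python sketch of one way to do it: when the "what is that?" intent is recognized, the client finds the entity nearest the player and packs its details into a flat dictionary of string parameters to attach to a trigger. `Entity`, `build_trigger_parameters`, and the parameter keys are all illustrative assumptions, and the step that actually sends the payload through the Inworld SDK is deliberately left out.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Entity:
    """Hypothetical game-side description of something the player can ask about."""
    name: str
    kind: str
    x: float
    y: float


def nearest_entity(player_xy: Tuple[float, float], entities: List[Entity]) -> Optional[Entity]:
    px, py = player_xy
    # Straight-line distance on the top-down map; None if nothing is nearby.
    return min(entities, key=lambda e: math.hypot(e.x - px, e.y - py), default=None)


def build_trigger_parameters(player_xy: Tuple[float, float], entities: List[Entity],
                             location: str) -> Dict[str, str]:
    """Assemble string parameters describing what the player is most likely asking about."""
    params = {"location": location}
    target = nearest_entity(player_xy, entities)
    if target is not None:
        distance = math.hypot(target.x - player_xy[0], target.y - player_xy[1])
        params["target_name"] = target.name
        params["target_kind"] = target.kind
        params["target_distance"] = f"{distance:.1f}"
    return params
```

Sending only a small, flat set of values like this keeps the per-question payload cheap, which matters when the answer needs to feel almost instantaneous.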

Voice commands for game actions

Voice commands

The Cygnus Enterprises team also used Inworld’s Goals and Actions to let PEA help players in response to voice commands.

Team Miaozi created a number of situations where voice commands can be used to control in-game objects, characters, and environments. For example, players can use voice commands to tell PEA to gather resources, shield them, heal them, follow them, provide mission items, or use resources to help build parts of the base.

“Speech-driven gameplay commands are extremely fun,” said Brian. “I think this will open up a lot of new gameplay opportunities. However, every action that AI NPCs take, you do have to program it right now. You can't just say to your character, ‘Hey, do a backflip’ and expect them to do a backflip. But if you set it up, they’ll do it.”
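Brian's point that every action has to be programmed can be illustrated with a simple dispatch table. The Python sketch below is hypothetical: it assumes the conversation layer hands the game a recognized intent name plus optional parameters, and the intent names, `Companion` class, and `handle_intent` function are stand-ins rather than Inworld or Cygnus Enterprises code. Only registered intents do anything; an unregistered request such as a backflip is simply ignored.

```python
from typing import Callable, Dict, List


class Companion:
    """Hypothetical stand-in for PEA's in-game controller; records what it was asked to do."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def gather(self, resource: str = "any") -> None:
        self.log.append(f"gather:{resource}")

    def shield(self) -> None:
        self.log.append("shield")

    def heal(self) -> None:
        self.log.append("heal")

    def follow(self) -> None:
        self.log.append("follow")


def make_dispatcher(pea: Companion) -> Dict[str, Callable[..., None]]:
    # Each supported voice command must be mapped to game code explicitly.
    return {
        "gather_resources": pea.gather,
        "shield_player": pea.shield,
        "heal_player": pea.heal,
        "follow_player": pea.follow,
    }


def handle_intent(dispatch: Dict[str, Callable[..., None]], intent: str, **params: str) -> bool:
    """Run the action for a recognized intent; return False if none was programmed."""
    action = dispatch.get(intent)
    if action is None:
        return False  # e.g. "do_backflip": never set up, so nothing happens
    action(**params)
    return True
```

Usage: `handle_intent(make_dispatcher(pea), "gather_resources", resource="Cygnite Ore")` queues a gather order, while an unknown intent returns `False` and the game state is unchanged.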

Voice commands are a particularly helpful addition to a top-down shooter Action RPG because they allow the player to focus on gameplay and still get help from their companion when needed. They can also save time. For example, in Cygnus Enterprises, you can ask PEA to gather resources for you as you fend off attacks.

Technical implementation choices

NetEase’s development team wanted to push the envelope on what was possible to do with AI NPCs. Here are the challenges they encountered and the implementation choices they made.

Character awareness

Team Miaozi wanted to ensure that their AI NPCs were aware of their surroundings so they could react to gameplay or respond when a player asked what something was. To do this, they first ensured each unique level or location had its own Scene in Inworld’s Character Engine. Each scene contained descriptive information about the environment, such as the type of climate, the presence of mountains or other environmental features, or the types of creatures that live there. This gave PEA a degree of general awareness of her surroundings.

Next, they used the character triggers and scene triggers already set up in the game to keep PEA apprised of events as they happened.

“You can send it to every AI NPC on the server side and it's like a silent whisper. They won’t necessarily say anything unless you have a Goals and Actions trigger set up so that they take an action or say something when an explosion happens, for example,” said Brian. “But you can make sure an NPC knows if there is a group of chargers attacking the player or if there’s an explosion. So, if the player says something about what’s happening around them, the character can respond in context.”

The NetEase team passed this information to the NPCs through parameters and Inworld Scene Triggers, which they found relatively easy to do since Inworld is installed in the blueprints.
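The "silent whisper" pattern Brian describes can be modeled in a few lines. This Python sketch is a hypothetical illustration, not Inworld's server API: each NPC holds its scene description plus a short rolling buffer of recent events, every broadcast event is recorded by every NPC, and an NPC only produces a line if a reaction has been configured for that event. The buffer size of five, the class and event names, and `context_summary` are all assumptions.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional


@dataclass
class NpcContext:
    """Hypothetical per-NPC awareness: a scene description plus recent game events."""
    name: str
    scene: str
    reactions: Dict[str, str] = field(default_factory=dict)  # event -> line to say
    recent_events: Deque[str] = field(default_factory=lambda: deque(maxlen=5))

    def whisper(self, event: str) -> Optional[str]:
        """Record the event silently; return a line only if a reaction is configured."""
        self.recent_events.append(event)
        return self.reactions.get(event)

    def context_summary(self) -> str:
        """Text the NPC could draw on if the player asks about what is happening."""
        events = ", ".join(self.recent_events) or "none"
        return f"Scene: {self.scene}. Recent events: {events}."


def broadcast(npcs: List[NpcContext], event: str) -> Dict[str, str]:
    """Send an event to every NPC; collect only the lines that were actually spoken."""
    spoken = {}
    for npc in npcs:
        line = npc.whisper(event)
        if line is not None:
            spoken[npc.name] = line
    return spoken
```

Because every NPC records the event but only those with a matching reaction speak, the game stays quiet while each character can still answer in context later.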

Misunderstandings

Custom phonemes

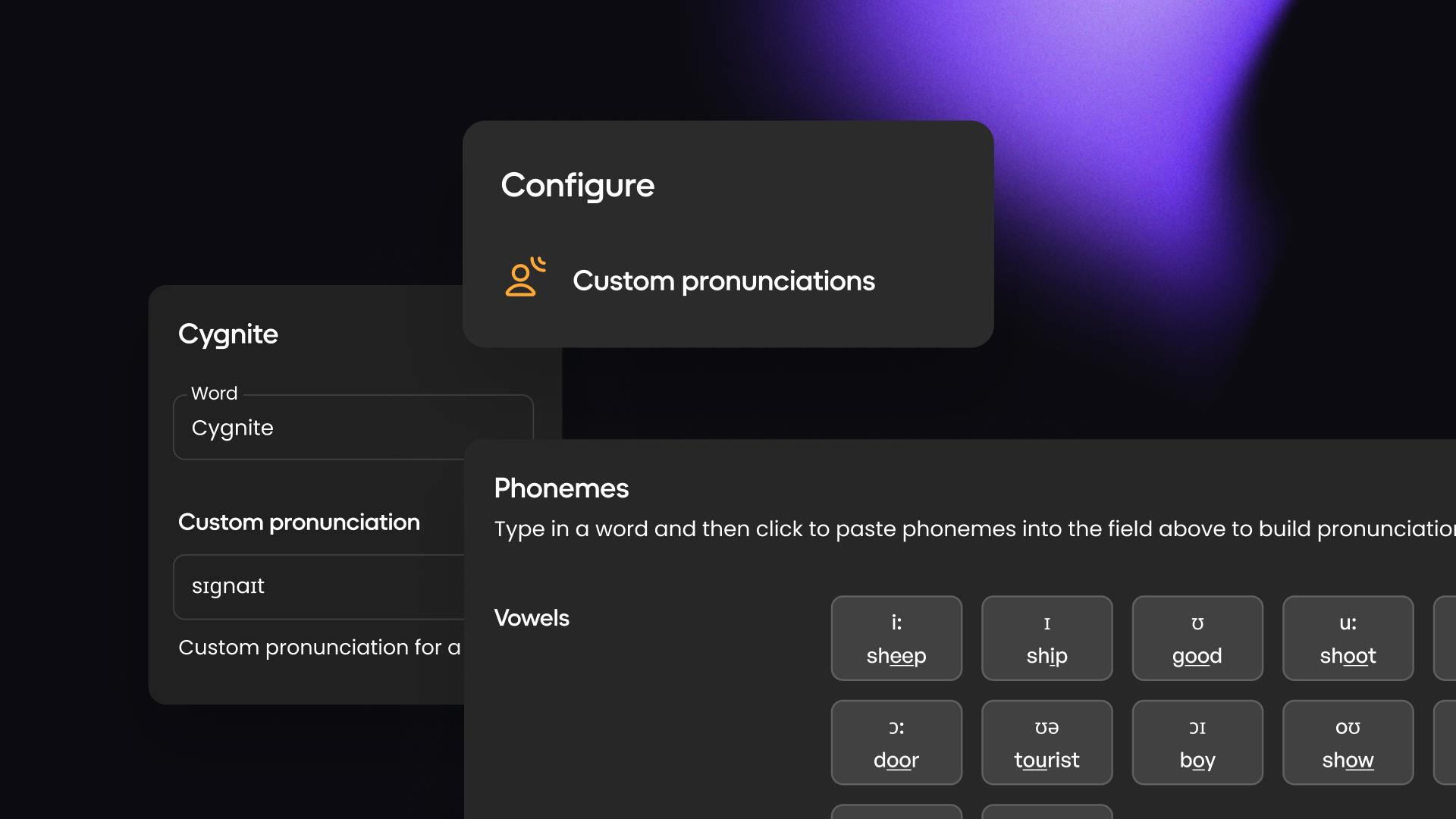

When Team Miaozi first started experimenting with AI NPCs, they quickly ran into problems with the NPCs misunderstanding what the players said. This was due to a number of factors, including speech-to-text misreadings, game-specific terminology that the NPCs couldn’t recognize, and difficulties with speech-to-text software understanding strong accents.

“One of the biggest initial problems was the speech-to-text software would often misunderstand what players said,” said Brian. “This would lead to PEA giving incorrect or inappropriate responses. For example, if a player said ‘I need to go to Dr. Daniel’s office,’ the NPC might misunderstand it as, ‘I need to go to the alpha office,’ and not know how to help you.”

Another problem was that the NPCs would sometimes misunderstand game-specific terminology. For example, if a player said "I need you to collect some Cygnite Ore for me," the NPC might instead hear "I need you to collect some Fulbright cores for me."

Inworld addressed Team Miaozi’s challenges by developing a new feature. “They created a feature where you can set up the phonetics for every word, so even if you have a made up word, you can select the vowels for that word and ensure that the character can understand it,” explained Brian.

Nathan sees this as a great example of how Inworld prioritizes its product roadmap according to customer needs. “We also added a feature that increased the likelihood of characters hearing a certain word over another,” Nathan explained. “This helped to prevent the NPCs from misunderstanding words that sounded similar or common game-specific words and names.”

With those new features, Team Miaozi was able to add custom phonemes that significantly reduced the number of misunderstandings between players and NPCs, which made the game more enjoyable to play.
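Inworld's phonetics and word-boosting features run on Inworld's side, and the article doesn't describe how they work internally. To give a feel for what "increasing the likelihood of hearing a certain word" means, here is a Python sketch of a different but related, commonly used technique: rescoring a speech-to-text engine's n-best list so that hypotheses containing known game vocabulary get a bonus. It assumes the STT engine returns several `(transcript, confidence)` candidates; the `rescore` function and the boost value of 0.15 are illustrative, not tuned values from the game.

```python
from typing import List, Sequence, Tuple


def rescore(hypotheses: List[Tuple[str, float]], boosted_terms: Sequence[str],
            boost: float = 0.15) -> str:
    """Pick the best transcript after adding a bonus for each boosted term it contains.

    hypotheses: non-empty n-best list of (transcript, confidence) pairs.
    boosted_terms: game-specific words or names the player is likely to say.
    """
    def score(item: Tuple[str, float]) -> float:
        text, confidence = item
        lowered = text.lower()
        hits = sum(1 for term in boosted_terms if term.lower() in lowered)
        return confidence + boost * hits

    # max() keeps the earlier hypothesis on ties, i.e. the engine's own ranking.
    return max(hypotheses, key=score)[0]
```

With the example from above, a lower-confidence "Cygnite Ore" hypothesis can overtake "Fulbright cores" once "Cygnite Ore" is on the boosted list; without any boosted terms, the engine's top guess wins unchanged.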

Conclusion

Team Miaozi was very happy with the results of their custom implementations. “The AI NPCs in our game are much more realistic and engaging,” Brian said.

Although they decided to add AI NPCs to Cygnus Enterprises after it had already launched, the NetEase team was surprised by how easy it was to implement Inworld characters in an existing game.

“Inworld characters can easily be implemented post-launch. Inworld AI works out of the box,” said Brian. “All you have to do is implement the SDK and it will work.” The team’s many custom solutions and implementations were also made easier by how adaptable Inworld’s Unity SDK is. “The SDK is very open and shows most of the code so you can easily make the adaptations that you need. Also, their team is helpful as well if you reach out to them,” he explained.

Here are some tips Team Miaozi has for others interested in implementing custom AI NPCs with Inworld:

- Start with a good understanding of the Inworld AI SDK.

- Plan your customizations carefully.

- Test your customizations thoroughly.

- Be prepared to make changes as needed.

Want to learn more? Read Part 2 of the series, where we take a deep dive into the narrative design choices Team Miaozi made around their Inworld implementation.

For more information about why Team Miaozi implemented AI NPCs and the impact they had on player engagement, see our Case Study on Cygnus Enterprises. Ready to integrate Inworld yourself? Get started in our Studio.