Speech synthesis: The path to creating expressive text-to-speech

We examine what expressive speech synthesis AI is, how it works, why it’s so hard to do well, and how to benchmark good speech synthesis.

Attempts to synthesize speech started over 200 years ago in St. Petersburg. In 1779, Christian Kratzenstein, a Russian professor, examined the physiological differences between five long vowels and created a machine that could produce them artificially through vibrating reeds and acoustic resonators.

Speech synthesis has come a long way over the years, evolving from the rudimentary speaking machines that inventors like Alexander Graham Bell created in the 1800s to electrical synthesizers that wowed crowds at the New York World’s Fair in the 1930s, and finally to text-to-speech (TTS) systems, which saw a breakthrough at the Electrotechnical Laboratory in Japan in the 1960s.

Throughout that 200-year period, the challenge of artificially reproducing speech has intrigued and daunted tens of thousands of inventors and computer scientists, partly because it's a particularly difficult problem to solve. Human speech is an intricate blend of emotion and prosody (e.g., pauses, non-linguistic exclamations, breath intakes, speaking style, contextual emphases, and much more). That expressiveness makes building a model that approximates human speech one of the most difficult machine learning tasks to attempt, and one that’s riddled with technical obstacles.

However, applying new machine learning techniques to today’s sophisticated deep learning models has led to significant progress in the last several years. The result is a recent generation of realistic text-to-speech models that produce speech resembling human speech patterns more closely than was previously possible.

But as applications of speech synthesis have multiplied with advancements in large language models like GPT-3 and GPT-4, the demand for different kinds of text-to-speech models to fit various use cases has increased.

Research efforts in the last five years have been focused on developing efficient or real-time models for expressive speech synthesis. This work has become a central theme in speech synthesis research because expressive speech is critical for potential applications in customer service, video games, dubbing, audiobooks, content production, and more.

In this post, we’ll examine what expressive speech synthesis is, why it’s so hard to do well, and how to benchmark good speech synthesis.

Interested in speech synthesis models and APIs? Test our model here.

What is expressive speech synthesis?

Expressive speech synthesis, also often referred to as expressive text-to-speech (TTS), represents a significant advancement in speech synthesis technology. Unlike traditional TTS systems that focus solely on converting text into speech, expressive speech synthesis aims to give synthesized speech human-like tones, emotions, and other characteristics of embodied speech like pauses, non-verbal utterances, and breath intakes.

Neural TTS models have been central to the development of expressive speech synthesis. Synthetic voices, the predecessors of neural voices, were known for their robotic intonations and stilted delivery that people typically associate with TTS. Neural text-to-speech, however, bears a much closer resemblance to real human voices by exhibiting natural flow, appropriate intonation, and nuanced characteristics such as tone, pace, delivery, pitch, and inflection.

How does neural text-to-speech work?

Neural text-to-speech (NTTS) uses machine learning techniques to train neural networks that learn the intricate nuances of human speech and generate outputs that resemble it. Traditionally, text-to-speech systems relied on rule-based or statistical models for speech synthesis. These systems struggled to capture the natural prosody, rhythm, and intonation of human speech because their predefined linguistic and acoustic models followed limited rule-based algorithms. This resulted in outputs that lacked the richness and authenticity of human speech.

In contrast, NTTS models are generative AI models trained on vast amounts of speech data to learn contextual connections between a text and how its words are spoken. That allows them to produce speech outputs with natural prosody. This shift from rule-based to data-driven approaches has unlocked new possibilities in expressive speech synthesis.

Early neural TTS models faced challenges in producing expressive and emotionally rich speech. However, advancements in deep learning techniques have enabled neural TTS systems to model the complex dynamics of human speech with greater fidelity. By incorporating emotion-specific acoustic features into neural networks, NTTS systems can modify the tone and pitch of synthesized voices to convey a range of emotions.

Moreover, newer neural text-to-speech models have reduced the data requirements for training, making it easier to develop TTS systems tailored to specific languages or dialects. This has democratized expressive speech synthesis, allowing developers to create TTS solutions that cater to diverse linguistic and cultural contexts.

Factors critical for expressive speech synthesis

While realistic speech synthesis models have made significant progress in recent years, there is still more work to be done in improving the naturalness, expressiveness, and latency of models. That’s because of the many factors expressive speech synthesis models must take into account that collectively contribute to the richness and authenticity of synthesized speech. While each factor plays a crucial role, here are some of the most important.

Prosody

Prosody refers to the rhythm, intonation, stress, and pitch variations in speech. It’s one of the most critical factors in expressive speech synthesis since it conveys emotional nuances, emphasis, and naturalness. Prosody also communicates things like gender, accent, dialect, age, other social signifiers, speaker state, and personality traits – something that is particularly important to capture for entertainment-focused use cases like video games. Capturing prosodic features accurately ensures that synthesized speech sounds lifelike and engaging and the speaker remains in-character.

Key challenges to achieving good prosody include:

- Expressive variation: Capturing expressive variation in speech, including emotions, emphasis, and speaker intentions, presents a prosody challenge for realistic TTS models. Expressive prosody adds richness and authenticity to synthesized speech but requires models to dynamically adjust speech parameters based on contextual cues.

- Linguistic variation: Prosodic patterns can vary significantly across languages, dialects, accents, and speaking styles. Expressive TTS models must be capable of adapting to this linguistic variation to produce natural-sounding speech across diverse contexts. Failure to account for linguistic variation may result in synthesized speech that sounds unnatural or inconsistent.

- Data sparsity: While recent years have brought advances in pruning TTS models and in achieving prosody transfer by fine-tuning on a small amount of voice input, recent research still suggests that large datasets remain helpful in achieving natural prosody on textually complex sentences. However, obtaining such data, especially for underrepresented languages or dialects, can be challenging. Data sparsity may lead to limited coverage of prosodic variability, resulting in less natural-sounding speech.

- Model complexity: Modeling prosody in neural TTS systems requires sophisticated architectures capable of capturing the intricate dynamics of speech rhythm and intonation. For example, a 2023 paper found that no single model architecture was best for prosody prediction and recommended an ensemble of models working together to predict prosody. Designing and training such complex models can be resource intensive.

Some potential strategies to overcome these challenges include using additional training data, specialized architectures, multi-task learning, autoencoders, fine-tuning, and effective evaluation metrics to guide model development.

Contextual awareness

Contextual awareness involves understanding the surrounding context of speech acts – including speaker intentions, discourse structure, and conversational cues. Contextually aware TTS models adapt speech output dynamically based on contextual information in the text, ensuring that synthesized speech is contextually appropriate and coherent.

Key challenges to achieving contextual awareness include:

- Semantic understanding: Expressive TTS models must accurately interpret the semantic content of input text to generate contextually appropriate speech. However, understanding the underlying meaning of text can be challenging, especially in cases of ambiguity, figurative language, or domain-specific terminology.

- Coherence: Maintaining coherence and continuity in synthesized speech across multiple utterances or turns of dialogue is essential for natural-sounding conversation. Expressive TTS models currently struggle to track and integrate contextual information in ongoing conversations to ensure smooth and coherent speech output.

- Adaptation to contextual changes: Expressive TTS models must dynamically adapt to changes in context, such as shifts in topic, speaker roles, or conversational dynamics. Adapting speech output in real-time to reflect evolving contextual cues requires models to be flexible and responsive to events in the conversation.

Some potential strategies to overcome these challenges include context encoding, improving semantic parsing, and contextual vocoding.

Acoustics and voice quality

Acoustic characteristics such as timbre, resonance, and pitch range contribute to the overall quality and realism of synthesized speech. High-quality acoustic modeling is essential for producing natural-sounding speech that closely resembles human voices.

Key challenges to achieving good acoustics include:

- Timbre and resonance: Speech synthesis AI models must accurately capture the timbral characteristics and resonance of human voices, including factors such as vocal tract length, shape, and spectral envelope. Achieving faithful reproduction of timbre and resonance is challenging due to the wide variability and subtle nuances present in natural speech.

- Pitch range and variation: Human voices exhibit a wide range of pitch variation, including changes in pitch level, contour, and modulation. AI speech synthesis models must be capable of producing pitch variations that sound natural and expressive to accurately convey speaker intentions and emotions.

- Articulation and pronunciation: Clear articulation and precise pronunciation are essential for intelligible speech synthesis. Expressive text-to-speech models must accurately model the articulatory movements and phonetic transitions involved in producing speech sounds, ensuring that synthesized speech is clear, crisp, and easily understood.

- Spectral balance and clarity: Maintaining spectral balance and clarity in synthesized speech is crucial for ensuring that speech sounds natural and pleasant to the listener. Realistic text-to-speech models must accurately reproduce the spectral characteristics of human speech, including the distribution of energy across different frequency bands.

Some potential strategies to overcome these challenges include using specialized architectures tailored to acoustic modeling and objective evaluation metrics like spectral distortion measures or perceptual quality scores.

Emotional expressiveness

Emotionally expressive speech synthesis involves infusing synthesized speech with various emotional tones, such as happiness, sadness, anger, or excitement. Emotional TTS models can dynamically adjust speech parameters to convey different emotions effectively, thus making the synthesized speech sound more natural.

Key challenges to achieving emotional expressiveness include:

- Emotion recognition: Emotional text-to-speech models must accurately recognize and interpret emotional cues present in input text to generate emotionally expressive speech. However, accurately detecting emotions from text can be challenging as emotions are often conveyed through subtle linguistic cues, tone of voice, and context.

- Emotion representation: Once emotions are recognized, emotional TTS models must effectively represent and encode emotional information to guide the speech synthesis process. Emotions are multifaceted and can vary in intensity, duration, and complexity – making it challenging to capture their richness and diversity in synthesized speech.

- Emotion transfer: Emotional TTS models must be capable of transferring emotional content from input text to synthesized speech in a natural and convincing manner. However, mapping textual emotions to appropriate prosodic features, such as pitch, rhythm, and intonation, requires sophisticated modeling techniques and robust training data.

- Cross-cultural variation: Emotional expression varies across cultures and linguistic communities, posing challenges for emotional text-to-speech models trained on diverse datasets. Models must be capable of adapting to cultural differences in emotional expression to ensure that synthesized speech is contextually appropriate and culturally sensitive.

Some potential strategies to overcome these challenges include things like emotion-targeted training, multi-modal input, prosody modeling, and more.

Speaker adaptation

Speaker adaptation allows expressive TTS models to mimic the unique characteristics of individual speakers' voices. By learning from speaker-specific data, adapted models can produce speech that closely resembles the voices of specific individuals, including accent, intonation, and speech patterns.

Key challenges to achieving good speaker adaptation include:

- Limited training data: Obtaining sufficient speaker-specific data for training realistic TTS models can be challenging, especially for less-represented speakers or languages. Limited training data may result in models that fail to capture the full range of speaker-specific characteristics, leading to less accurate speaker adaptation.

- Speaker variability: Human voices exhibit significant variability in terms of pitch, accent, speech rate, and other acoustic characteristics. Realistic TTS models must be capable of capturing this variability and adapting speech synthesis accordingly to produce speaker-specific output.

- Generalization: Speaker adaptation techniques must enable expressive TTS models to generalize effectively to unseen speakers with similar characteristics. However, adapting to new speakers while retaining the ability to generalize across speakers poses a challenging trade-off in model design and training.

- Overfitting: Overfitting occurs when a model learns to memorize speaker-specific characteristics from training data rather than capturing generalizable patterns. Speaker adaptation techniques must mitigate the risk of overfitting by balancing speaker-specific adaptation with generalization to unseen speakers.

Some potential strategies to overcome these challenges include things like transfer learning, scaling up the dataset, fine-grained adaptation techniques, and more.

How do you benchmark expressive speech synthesis?

Evaluating the quality of speech is complicated. Conventional evaluations of TTS model performance often rely on the Mean Opinion Score (MOS), where human raters assess voice quality on a scale of 1 to 5. However, this method is expensive, slow, and impractical when the model and its data are adjusted frequently during training.
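
For reference, a MOS is simply the arithmetic mean of the listener ratings, usually reported with a confidence interval. Below is a minimal sketch, assuming independent raters and a normal-approximation 95% interval. The function name `mean_opinion_score` and the sample ratings are hypothetical and do not come from any evaluation described in this post.

```python
import math
import statistics


def mean_opinion_score(ratings):
    """Return (mos, half_width) for a list of 1-5 listener ratings.

    half_width is a 95% confidence half-width using the normal
    approximation (1.96 * standard error); it assumes independent raters.
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on the 1-5 scale")
    mos = statistics.mean(ratings)
    std_err = statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, 1.96 * std_err


# Hypothetical ratings from eight listeners for one synthesized clip.
ratings = [4, 5, 4, 3, 4, 5, 4, 3]
mos, ci = mean_opinion_score(ratings)
print(f"MOS = {mos:.2f} +/- {ci:.2f}")
```

Every rating in that list is a human listening session, and tightening the interval means recruiting more listeners, which is why MOS is hard to rerun after each training change.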

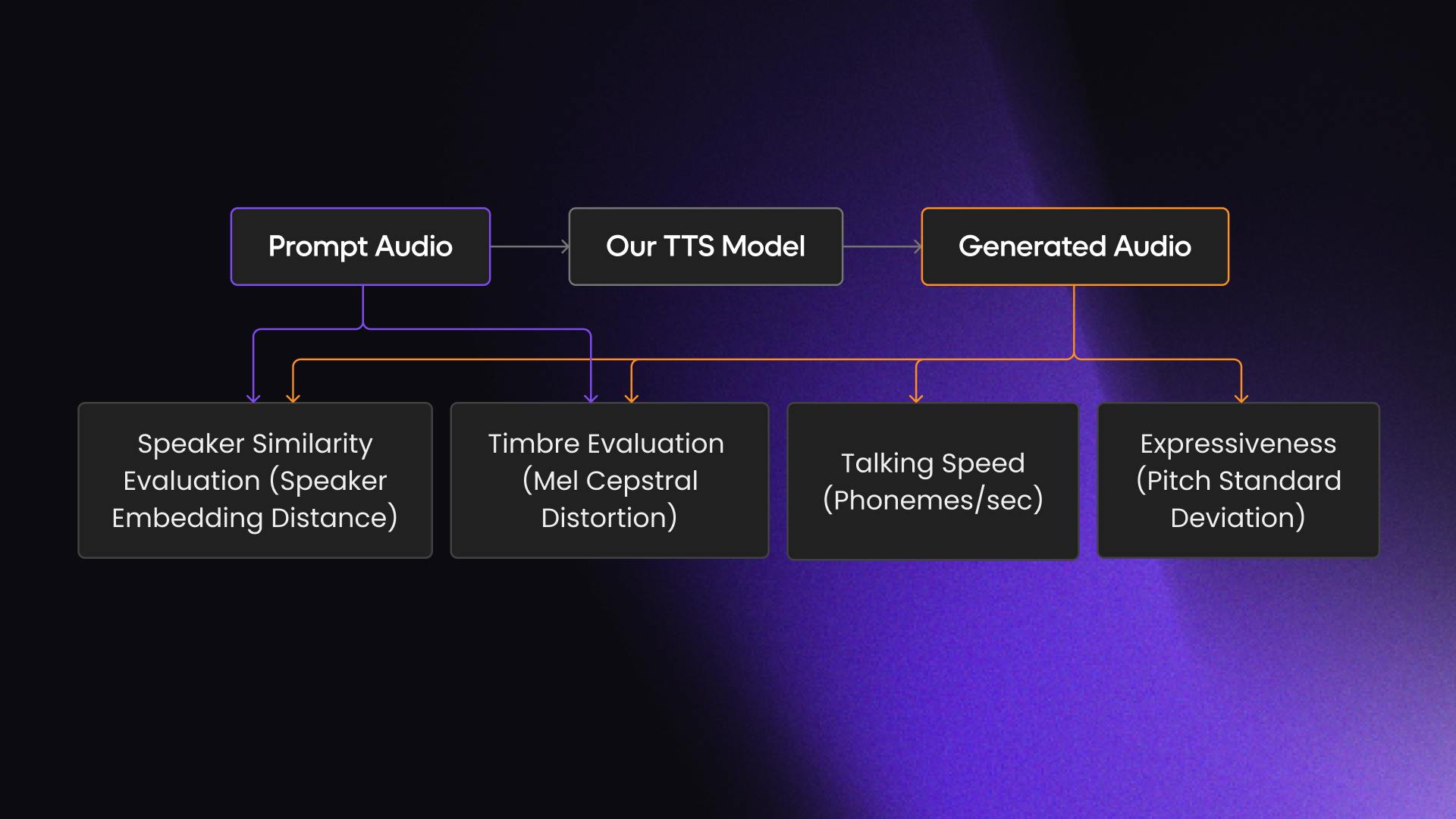

At Inworld, we developed an automated evaluation framework that assesses the quality of the voice prompt and the generated voice across five key factors, allowing us to iterate faster on our model:

- Text generation accuracy: We checked how accurately the model spoke its input by transcribing the generated audio with an Automatic Speech Recognition (ASR) model and comparing the transcript against the original text.

- Speaker similarity evaluation: Evaluating voice similarity between the generation and prompt involved a speaker verification model. Leveraging pre-trained models that convert the audio generated into embedding representations, we computed cosine similarity between audio representations, with closer embeddings indicating higher voice similarity.

- Timbre evaluation: The Mel Cepstral Distortion (MCD) metric helped us quantify the spectral similarity between synthesized speech and a reference sample. It calculates the average Euclidean distance between their Mel-frequency cepstral coefficients (MFCCs). The MFCC representation characterizes the timbre of speech, the quality that makes voices sound different even when at the same pitch. A lower MCD value indicates a closer match between the synthesized and reference samples.

- Talking speed: Quantifying phonemes per second served as a reliable indicator of speech naturalness, ensuring voices avoided sounding excessively slow or robotic. Striking a balance in phonemes per second helped maintain optimal tempo and pace while retaining naturalness.

- Expressiveness: To gauge expressiveness, we employed Pitch Estimating Neural Networks (PENN) to measure pitch variation in generated audio. We analyzed the standard deviation of pitch to optimize for natural levels of variation, steering clear of monotone voices and aligning with expressive speech patterns.
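
The first two checks in the list above can be sketched in a few lines. This is an illustrative sketch, not Inworld's implementation. It assumes the ASR transcript and the speaker embeddings have already been produced by external models, and it assumes word error rate (WER) as the accuracy measure, which the post does not specify. All function names and sample values are hypothetical.

```python
import math


def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference text is empty")
    # prev[j] = edit distance between the ref words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero-norm embedding")
    return dot / (norm_a * norm_b)


# Hypothetical input text and ASR transcript of the generated audio.
text = "the quick brown fox jumps over the lazy dog"
transcript = "the quick brown fox jumped over a lazy dog"
print(f"WER: {word_error_rate(text, transcript):.3f}")

# Hypothetical 4-dimensional speaker embeddings (real ones are far larger).
prompt_emb = [0.12, -0.40, 0.88, 0.05]
generated_emb = [0.10, -0.35, 0.90, 0.10]
print(f"speaker similarity: {cosine_similarity(prompt_emb, generated_emb):.3f}")
```

A lower WER means the generated audio says what the text asked for, while a cosine similarity closer to 1 means the generated voice sits closer to the prompt voice in embedding space.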

By adopting this comprehensive framework, our goal was to expedite and streamline the assessment process and enable the efficient refinement of TTS models with enhanced voice quality.
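
The timbre, talking speed, and expressiveness measurements described above can be sketched as follows. This is a hedged illustration, not the framework's actual code. It assumes the MFCC frames, the phoneme count, and the pitch track have already been extracted by other tools (the pitch track by a pitch estimator such as PENN, whose API is not shown here). The MCD function uses the common dB-scaled form and assumes the two frame sequences are already time-aligned. All names and values are hypothetical.

```python
import math
import statistics

# Constant that converts a cepstral Euclidean distance to decibels.
MCD_SCALE = 10.0 / math.log(10.0) * math.sqrt(2.0)


def mel_cepstral_distortion(ref_frames, syn_frames):
    """Mean per-frame MCD in dB between two aligned MFCC sequences.

    Each frame is a list of coefficients. Coefficient 0 (overall energy)
    is skipped, as is conventional.
    """
    if len(ref_frames) != len(syn_frames) or not ref_frames:
        raise ValueError("need two non-empty, equally long frame sequences")
    total = 0.0
    for ref, syn in zip(ref_frames, syn_frames):
        sq_dist = sum((r - s) ** 2 for r, s in zip(ref[1:], syn[1:]))
        total += MCD_SCALE * math.sqrt(sq_dist)
    return total / len(ref_frames)


def phonemes_per_second(phoneme_count, duration_seconds):
    """Speaking rate of a clip."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return phoneme_count / duration_seconds


def pitch_std_hz(f0_track):
    """Standard deviation of pitch (Hz) over voiced frames.

    Unvoiced frames are assumed to be marked with 0 or None.
    """
    voiced = [f for f in f0_track if f]
    if len(voiced) < 2:
        raise ValueError("need at least two voiced frames")
    return statistics.stdev(voiced)


# Hypothetical, tiny inputs purely for illustration.
ref_mfcc = [[1.0, 0.5, -0.2], [0.9, 0.4, -0.1]]
syn_mfcc = [[1.1, 0.6, -0.2], [0.8, 0.4, -0.3]]
print(f"MCD: {mel_cepstral_distortion(ref_mfcc, syn_mfcc):.2f} dB")
print(f"rate: {phonemes_per_second(60, 5.0):.1f} phonemes/s")
print(f"pitch std: {pitch_std_hz([0, 100.0, 110.0, None, 120.0]):.1f} Hz")
```

Because each function returns a single number per clip, the scores can be tracked across training checkpoints without waiting for human raters.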

Inworld’s advances in speech synthesis

Despite the challenges that speech synthesis AI models face in generating expressive and lifelike speech, the rate at which models have improved and become more efficient over the last few years is exciting.

At Inworld, we've made significant advancements in reducing latency for both our Inworld Studio and standalone voices, giving our customers more options to generate natural-sounding conversations for their real-time Inworld projects or for use as a standalone API.

Inworld’s speech synthesis model offers high-fidelity voice generation that adds realism with lifelike rhythm, intonation, expressiveness, and tone, while also achieving extremely low latency. Our new voices also have a median (50th percentile) end-to-end latency of 250 ms for approximately 6 seconds of audio generation.
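
The latency figure above reads as a 50th-percentile (median) measurement: half of the requests finish at or below it. As a minimal sketch of how such a percentile is typically computed from raw timings, the example below uses Python's standard library. The function name and the sample values are hypothetical and are not Inworld measurements.

```python
import statistics


def latency_percentile(samples_ms, pct):
    """Return the pct-th percentile (1-99) of latency samples, in ms."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    if not 1 <= pct <= 99:
        raise ValueError("pct must be between 1 and 99")
    # quantiles(n=100) returns the 99 cut points p1..p99.
    cut_points = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return cut_points[pct - 1]


# Hypothetical end-to-end timings (ms) for nine synthesis requests.
samples = [210, 230, 240, 250, 250, 260, 270, 300, 410]
print(f"p50: {latency_percentile(samples, 50):.0f} ms")
print(f"p90: {latency_percentile(samples, 90):.0f} ms")
```

Reporting a higher percentile alongside the median shows how slow the worst requests are, which matters for real-time conversation.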

The current state of our TTS model and of expressive TTS technology more broadly opens up exciting opportunities for its use in applications like gaming, audiobooks, voiceovers, customer service, content creation, podcasts, AI assistants, and more. With the pace of recent developments, expect the output of TTS to soon be nearly indistinguishable from real voices.

Want to hear more of Inworld’s TTS voices?