Inworld Runtime

The best way to build and optimize realtime conversational AI and voice agents

Lightning-fast with integrated telemetry and A/B testing.

Why Inworld Runtime

Inworld Runtime gives you the best quality, availability and speed

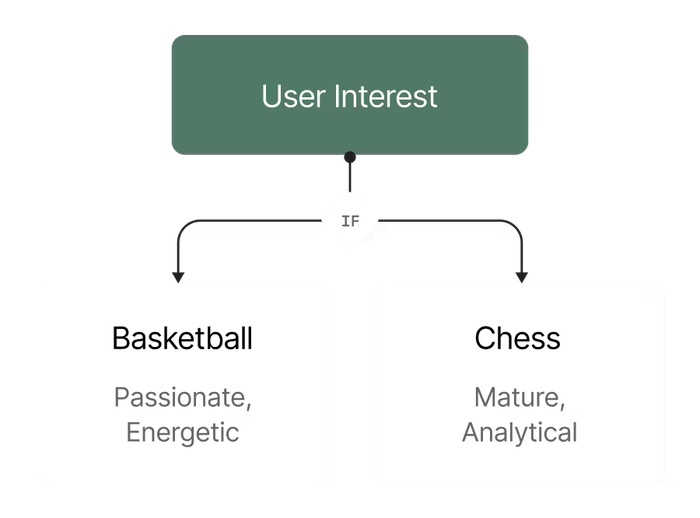

Exceptional Quality

Serve personalized models and prompts to delight every user.

High Availability

Automatic failovers prevent downtime from outages and rate limits.
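As a rough illustration of the failover idea, the sketch below tries a list of providers in order and falls through to the next one when a call fails. It is not Inworld Runtime's implementation or API. `ProviderError`, `call_with_failover`, `primary`, and `backup` are hypothetical names invented for this example.

```python
from typing import Callable, List, Sequence


class ProviderError(Exception):
    """Hypothetical error a provider raises on an outage or rate limit."""


def call_with_failover(prompt: str, providers: Sequence[Callable[[str], str]]) -> str:
    """Return the first successful provider response, trying providers in order."""
    errors: List[Exception] = []
    for provider in providers:
        try:
            return provider(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(exc)
    raise RuntimeError(f"all {len(providers)} providers failed: {errors}")


# Stub providers standing in for real model backends (assumptions, not real APIs).
def primary(prompt: str) -> str:
    raise ProviderError("rate limited")


def backup(prompt: str) -> str:
    return f"backup reply to: {prompt}"


reply = call_with_failover("hi", [primary, backup])
```

Here the stubbed `primary` provider always fails, so `reply` comes from `backup`.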

Ultra Low Latency

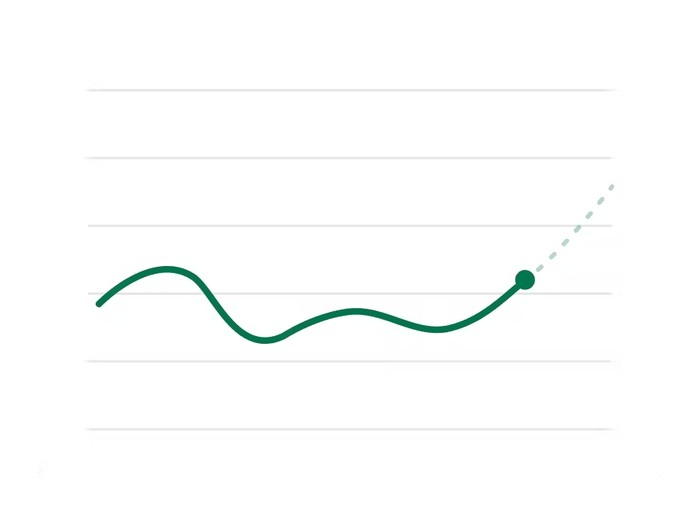

Lightning-fast execution that scales seamlessly from 10 to 10M users with minimal code changes.

Proven Results

Built for every consumer AI application - from social apps and games to learning and wellness.

Bible Chat

Increased voice AI feature engagement and reached millions

Built for every consumer AI application

Runtime scales consumer AI, driving experiences from social apps and games to learning and wellness.

Gaming & Interactive Media

Dynamic NPCs, interactive storytelling, and immersive experiences powered by AI that scales from indie games to AAA studios

Get started

Inworld easily integrates with any existing stack or provider (Anthropic, Google, Mistral, OpenAI, etc.) via one API key.

Available to everyone now.

FAQ

How is Runtime different from other AI backends?

Inworld Runtime uniquely combines:

- lightning-fast C++ core for realtime multimodal conversational interactions.

- built-in telemetry for deep user insights (traces & logs).

- live A/B testing to accelerate improvements to the end-user experience.
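One common way to run a live A/B test is to assign each user to a variant deterministically, so the same user always sees the same experience. The sketch below shows that general technique with a hash of the user ID. It is not Inworld Runtime's mechanism, and `assign_variant`, the experiment name, and the variant labels are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(
    user_id: str,
    experiment: str,
    variants: Sequence[str] = ("control", "treatment"),
) -> str:
    """Map a user to a variant; the same inputs always give the same variant."""
    # Salting with the experiment name keeps assignments independent across experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Hypothetical experiment comparing two prompt versions.
variant = assign_variant("user-123", "prompt-v2-rollout")
```

Because the assignment depends only on the user ID and experiment name, no per-user state has to be stored to keep the experience consistent across sessions.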

Is Runtime free?

Yes, Runtime is free. Consumption of models is the only thing you pay for. Runtime itself incurs no cost or license fee. Learn more about model pricing here.

How do I get started?

Follow our quick start guide to deploy a realtime conversational AI endpoint in 3 minutes, then integrate it into your app.

Who is Inworld Runtime designed for?

Inworld Runtime is specifically designed for developers building realtime conversational AI and voice agents that scale to millions of concurrent users.

Use cases include language tutors, social media, AI companions, game characters, fitness coaches, shopping agents, and more.

Can Inworld Runtime work with my existing framework and stack?

Yes, you can use the Inworld CLI to deploy a hosted endpoint that can be easily called by any part of your existing stack.

What production-ready building blocks does Inworld Runtime provide?

Developers get a full suite of pre-optimized nodes to construct any realtime AI pipeline that can scale to millions of users, including nodes for:

- model I/O (STT, LLM, TTS)

- data engineering (prompt building, chunking)

- flow logic (keyword matching, safety)

- external tool calls (MCP integrations)

- and more
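To make the node idea concrete, here is a minimal sketch of a pipeline that chains STT, safety, prompt-building, LLM, and TTS steps as plain functions. It does not use the Inworld Runtime SDK. Every function name is hypothetical, the "models" are stubs, and audio is represented as a string purely for illustration.

```python
from typing import Callable, Sequence

# In this sketch a node is any function that takes a payload and returns a new one.
Node = Callable[[str], str]


def run_pipeline(nodes: Sequence[Node], payload: str) -> str:
    """Pass the payload through each node in order."""
    for node in nodes:
        payload = node(payload)
    return payload


def fake_stt(audio: str) -> str:
    # Stub speech-to-text: strip the marker that stands in for audio data.
    return audio.replace("audio:", "", 1)


def safety_filter(text: str) -> str:
    # Stub flow logic: drop words found on a tiny blocklist.
    blocked = {"badword"}
    return " ".join(w for w in text.split() if w.lower() not in blocked)


def build_prompt(text: str) -> str:
    # Stub prompt building.
    return f"User said: {text}"


def fake_llm(prompt: str) -> str:
    # Stub LLM: echo the prompt instead of calling a model.
    return f"Echo[{prompt}]"


def fake_tts(text: str) -> str:
    # Stub text-to-speech: tag the text as audio.
    return f"audio:{text}"


pipeline = [fake_stt, safety_filter, build_prompt, fake_llm, fake_tts]
result = run_pipeline(pipeline, "audio:hello badword world")
# result == "audio:Echo[User said: hello world]"
```

Keeping every node to the same input and output shape is what lets steps be reordered or swapped, for example replacing one stub with a real model call, without touching the rest of the pipeline.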